[ad_1]

Image created by the author using DALL-E 3

“Computers are like bicycles for our minds,” Steve Jobs once remarked. Let’s think about pedaling through the scenic landscape of Web Scraping with ChatGPT as your guide.

Along with its other excellent uses, ChatGPT can be your guide and companion in learning anything, including Web Scraping. And remember, we’re not just talking about learning Web Scraping; we’re talking about rethinking how we learn it.

Buckle up for sections that stitch curiosity with code and explanations. Let’s get started.

Here, we need a great plan. Web Scraping can serve you in doing novel Data Science projects that will attract employers and may help you finding your dream job. Or you can even sell the data you scrape. But before all of this, you should make a plan. Let’s explore what I’m talking about.

First Thing First : Let’s Make a Plan

Albert Einstein once said, ‘If I had an hour to solve a problem, I’d spend 55 minutes thinking about the problem and five minutes thinking about solutions.’ In this example, we will follow his logic.

To learn Web Scraping, first, you should define which coding library to use it. For instance, if you want to learn Python for Data Science, you should break it down into subsections, such as:

- Web Scraping

- Data Exploration and Analysis

- Data Visualization

- Machine Learning

Like this, we can divide Web Scraping into subsections before doing our selection. We still have many minutes to spend. Here are the Web Scraping libraries;

- Requests

- Scrapy

- BeautifulSoup

- Selenium

Great, let’s say you’ve chosen BeautifulSoup. I would advise you to prepare an excellent content table. You can choose this content table from a book you found on the web. Let’s say your content table’s first two sections will be like this:

Title: Mastering Web Scraping with BeautifulSoup

Contents

Section 1: Foundations of Web Scraping

- Introduction to Web Scraping

- Getting Started with Python and BeautifulSoup

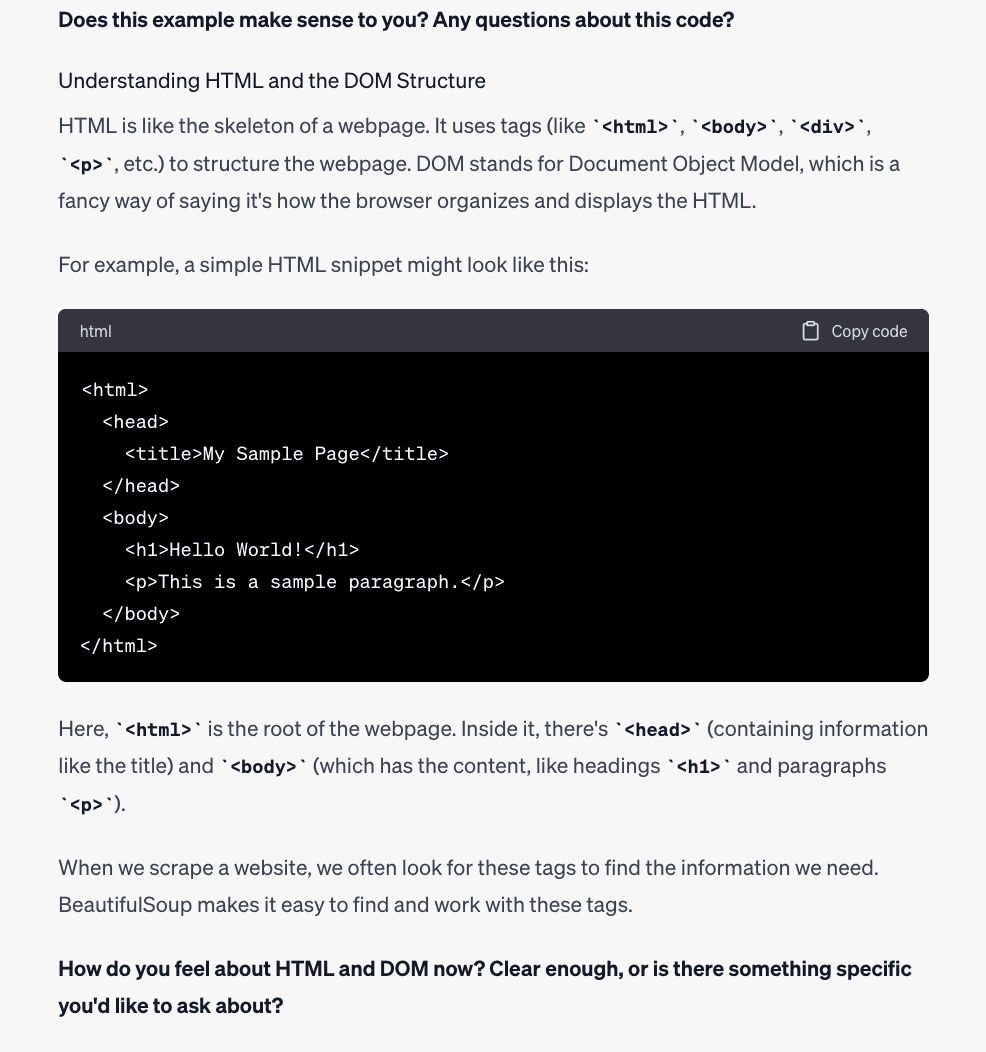

- Understanding HTML and the DOM Structure

Section 2: Setting Up and Basic Techniques

- Setting Up Your Web Scraping Environment

- Basic Techniques in BeautifulSoup

Also, please don’t research the E-Book mentioned above as I created it just for this example.

Now, you have your content table. It’s time to follow your daily learning schedule. Let’s say today you want to learn Section 1. Here is the prompt you can use:

Act as a Python teacher and explain the following subsections to me, using coding examples. Keep the tone conversational and suitable for a 9th grade level, and assume I am a complete beginner. After each subsection, ask if I have understood the concepts and if I have any questions

Section 1: Foundations of Web Scraping

- Introduction to Web Scraping

- Getting Started with Python and BeautifulSoup

- Understanding HTML and the DOM Structure”

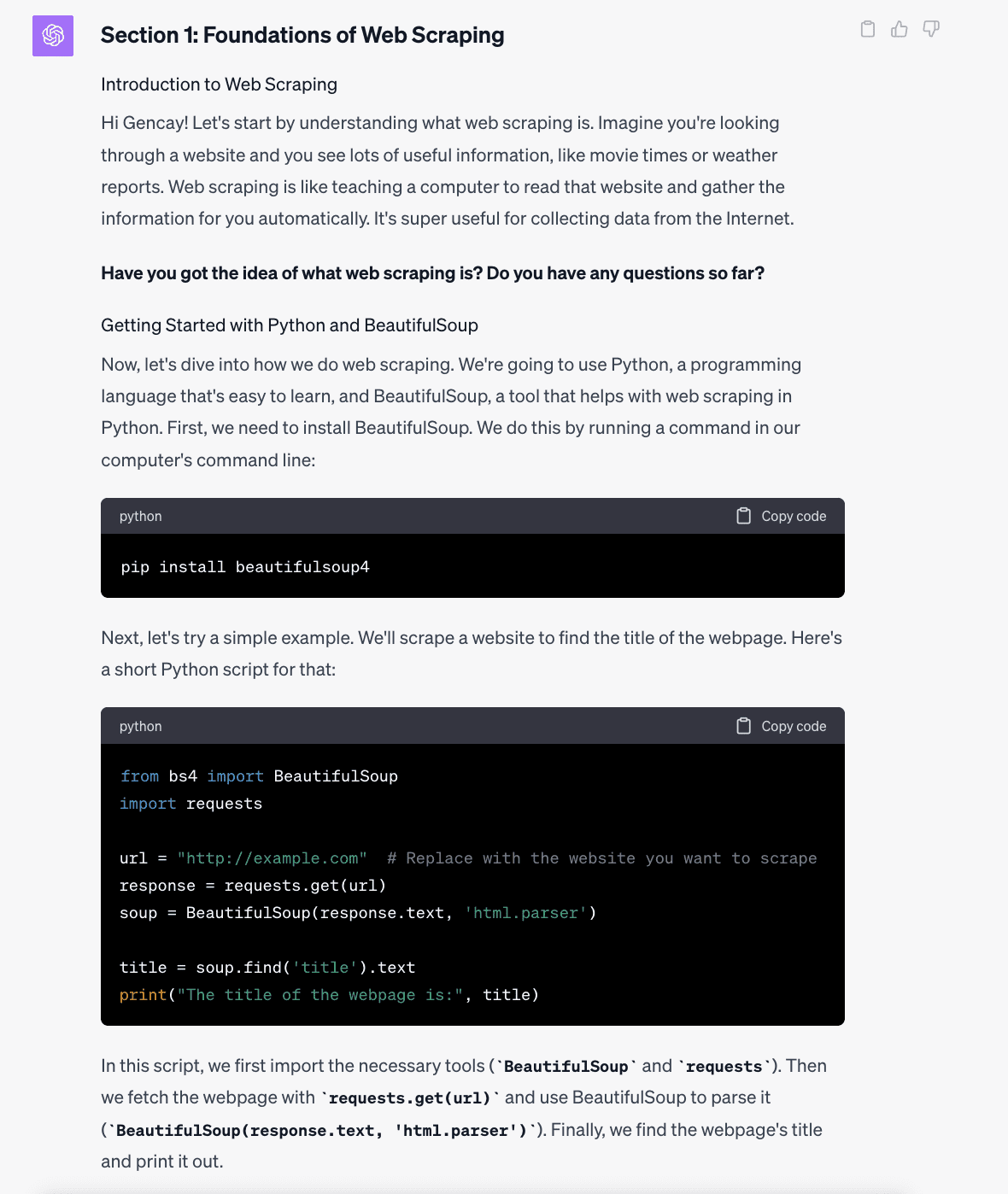

Here is the first section of the ChatGPT output. It explains concepts as if to a beginner, provides coding examples, and asks questions to check your understanding, which is cool. Let’s see the remaining part of its answer.

Great, now you understand it a bit better. As you can see from this example, it has already provided valuable information about Web Scraping. But let’s explore how it can assist you with more advanced applications.

Important Note: Be mindful of potential inaccuracies in ChatGPT’s responses. Always verify the information it provides afterward.

As you can see from the previous examples, once you have a solid plan, ChatGPT can be quite helpful in learning concepts, like Web Scraping. In this section, we will explore further applications of ChatGPT, such as debugging or improving your code.

Debug Your Code

Sometimes, debugging can be really difficult and time-consuming, and if you didn’t write the code correctly, you might spend a lot of time on it, as shown in the code below.

In the code below, we aim to scrape quotes from the website, that oftenly used, while learning Web Scraping with Python, let’s see.

import requests

from bs4 import BeautifulSoup

def scrape_quotes():

page = 1

while True:

# This URL will not change with page number, causing the bug.

url = "https://quotes.toscrape.com/page/1/"

response = requests.get(url)

if response.ok:

soup = BeautifulSoup(response.text, 'html.parser')

quotes = soup.find_all('span', class_='text')

if quotes:

for quote in quotes:

print(quote.text)

else:

# This condition will never be true since 'quotes' will not be empty.

print("No more quotes to scrape.")

break

# The page variable is incremented, but not used in the URL.

page += 1

else:

print(f"Failed to retrieve the webpage, status code: {response.status_code}")

break

scrape_quotes()

Can you detect the error in the code? If not, that’s okay; I guess apart from expertes, there are very few people who can do that.

The error was a classic case of a loop that never reaches its end condition, so be careful before running or make ready to stop it manually.

This error meant that although our page variable increased, our script kept requesting the same page, trapping us in an infinite loop.

Here, all you need to do is using following prompt to debug your code.

And voila, it will debug your code chunk instantly and provide you the latest version.

import requests

from bs4 import BeautifulSoup

def scrape_quotes():

page = 1

while True:

url = f"https://quotes.toscrape.com/page/{page}/" # Now the URL updates with the page number.

response = requests.get(url)

if response.ok:

soup = BeautifulSoup(response.text, 'html.parser')

quotes = soup.find_all('span', class_='text')

if quotes:

for quote in quotes:

print(quote.text)

else:

print("No more quotes to scrape.")

break

page += 1 # Increment the page number.

else:

print(f"Failed to retrieve the webpage, status code: {response.status_code}")

break

scrape_quotes()

The output will contain the all quotes from the website.

Improve Your Code

Let’s say you’ll scrape quotes from the website above.

Here is the code.

import requests

from bs4 import BeautifulSoup

import time

def scrape_quotes_longer():

page = 1

all_quotes = []

while True:

try:

response = requests.get(f"https://quotes.toscrape.com/page/{page}/", timeout=5)

if not response.ok:

break

soup = BeautifulSoup(response.text, 'html.parser')

quotes = soup.find_all('span', class_='text')

for quote in quotes:

all_quotes.append(quote.text)

# Naive check for the 'next' button

next_btn = soup.select_one('li.next > a')

if not next_btn:

break

page += 1

time.sleep(2) # Sleep for 2 seconds between requests

except requests.RequestException as e:

print(f"Request failed: {e}")

break

return all_quotes

quotes = scrape_quotes_longer()

for q in quotes:

print(q)

The output will be the same we had before, all quotes will be there, but let’s say, you are not satisfied with the code and you want to improve your code.

You can use following prompt, it is simple but powerful.

Now let’s see the new code that ChatGPT offers.

import requests

from bs4 import BeautifulSoup

from requests.exceptions import RequestException

from time import sleep

def scrape_quotes_improved():

page = 1

all_quotes = []

headers = {'User-Agent': 'Mozilla/5.0'}

while True:

url = f"https://quotes.toscrape.com/page/{page}/"

try:

response = requests.get(url, headers=headers, timeout=5)

response.raise_for_status() # Will raise an HTTPError if the HTTP request returned an unsuccessful status code

soup = BeautifulSoup(response.text, 'html.parser')

quotes = [quote.text for quote in soup.find_all('span', class_='text')]

if not quotes:

break

all_quotes.extend(quotes)

next_btn = soup.select_one('li.next > a')

if not next_btn:

break # No more pages

page += 1

sleep(1) # More reasonable delay

except RequestException as e:

print(f"Request failed: {e}")

break

return all_quotes

quotes = scrape_quotes_improved()

for q in quotes:

print(q)

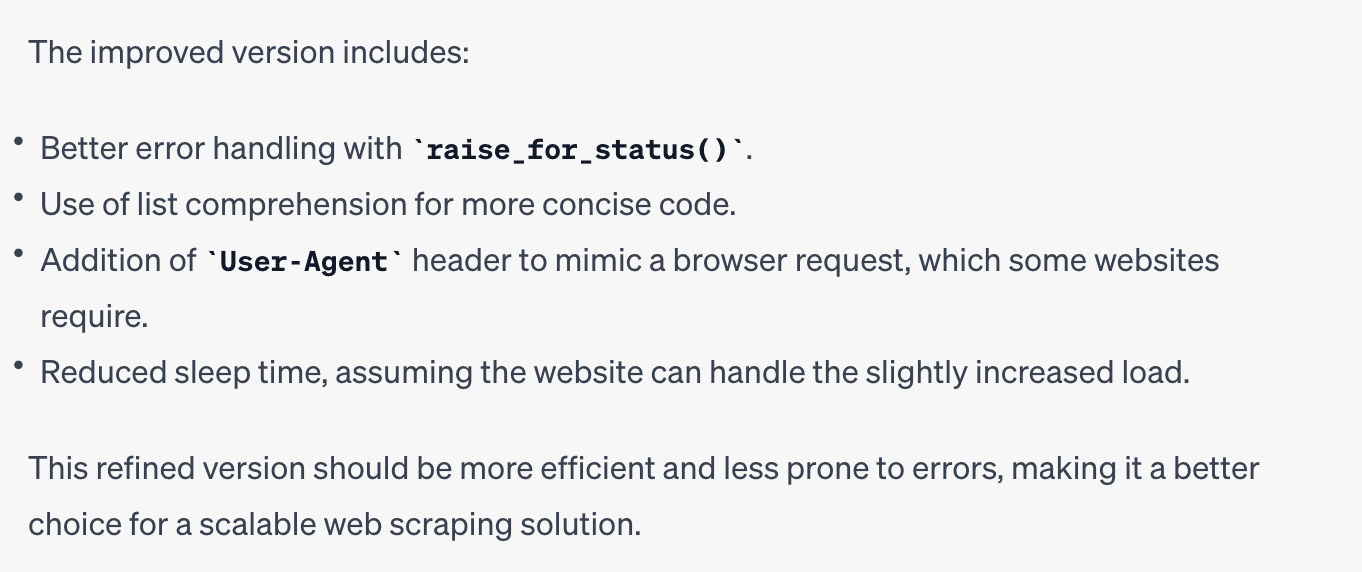

The output will be the same, but here is the improvements that ChatGPT made.

As you can see it is really powerful.

Image created by the author using DALL-E 3

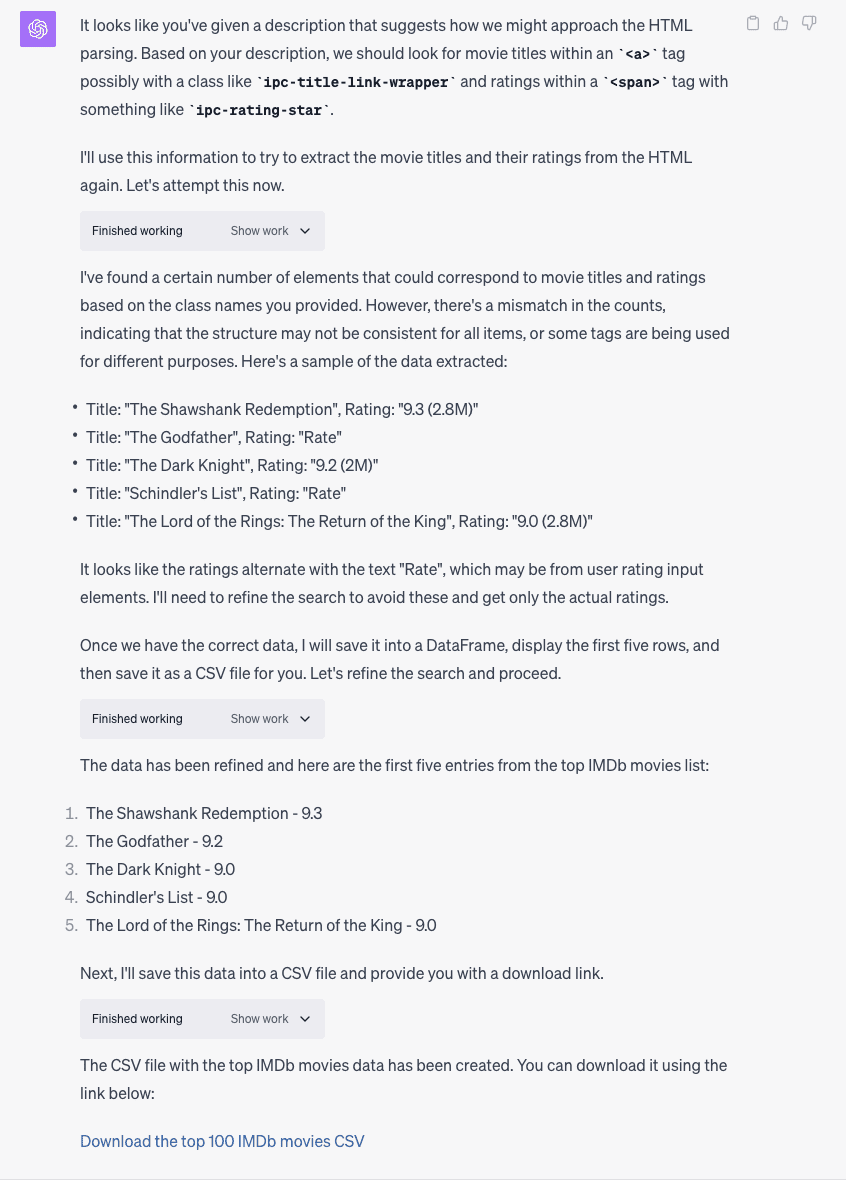

Here, you can try to automate the whole web scraping process, by downloading HTML file from the webpage you want to scrape, and send the HTML document to the ChatGPT Advanced Data Analysis, by adding file to it.

Let’s see from the example. Here is the IMDB website, that contains top 100 rating movies, according to IMDB user ratings, but at the end of this webpage, don’t forget to click 50 more, to allow this web page, that will show all 100 together.

After that, let’s download the html file by right clicking on the web page and then click on save as, and select html file. Now you got the file, open ChatGPT and select the advanced data analysis.

Now it is time to add the file you downloaded HTML file at first. After adding file, use the prompt below.

Save the top 100 IMDb movies, including the movie name, IMDb rating, Then, display the first five rows. Additionally, save this dataframe as a CSV file and send it to me.

But here, if the web pages structure is a bit complicated, ChatGPT might not understand the structure of your website fully. Here I advise you to use new feature of it, sending pictures. You can send the screenshot of the web page you want to collect information.

To use this feature, click right on the web page and click inspect, here you can see the html elements.

Send this pages screenshot to the ChatGPT and ask more information about this web pages elements. Once you got these more information ChatGPT needs, turn back to your previous conversation and send those information to the ChatGPT again. And voila!

We discover Web Scraping through ChatGPT. Planning, debugging, and refining code alongside AI proved not just productive but illuminating. It’s a dialogue with technology leading us to new insights.

As you already know, Data Science like Web Scraping demands practice. It’s like crafting. Code, correct, and code again—that’s the mantra for budding data scientists aiming to make their mark.

Ready for hands-on experience? StrataScratch platform is your arena. Go into data projects and crackinterview questions, and join a community which will help you grow. See you there!

Nate Rosidi is a data scientist and in product strategy. He’s also an adjunct professor teaching analytics, and is the founder of StrataScratch, a platform helping data scientists prepare for their interviews with real interview questions from top companies. Connect with him on Twitter: StrataScratch or LinkedIn.

[ad_2]

Source link