[ad_1]

Natural picture production is now on par with professional photography, thanks to a notable recent improvement in quality. This advancement is attributable to creating technologies like DALL·E3, SDXL, and Imagen. Key elements driving these developments are using the potent Large Language Model (LLM) as a text encoder, scaling up training datasets, increasing model complexity, better sampling strategy design, and improving data quality. The research team feels that now is the right time to focus on developing a more professional image, especially in graphic design, given its crucial functions in branding, marketing, and advertising.

As a professional field, graphic design uses the power of visual communication to communicate clearly defined messages to certain social groups. It’s a field that demands imagination, ingenuity, and quick thinking. In graphic design, text and visuals are typically combined using digital or manual methods to create visually engaging stories. Its main objective is to organize data, provide meaning to concepts, and provide expression and emotion to objects that document human experiences. The creative use of typeface, text arrangement, ornamentation, and images in graphic design frequently allows ideas, feelings, and attitudes that cannot be expressed by words alone. Producing top-notch designs requires high imagination, ingenuity, and lateral thinking.

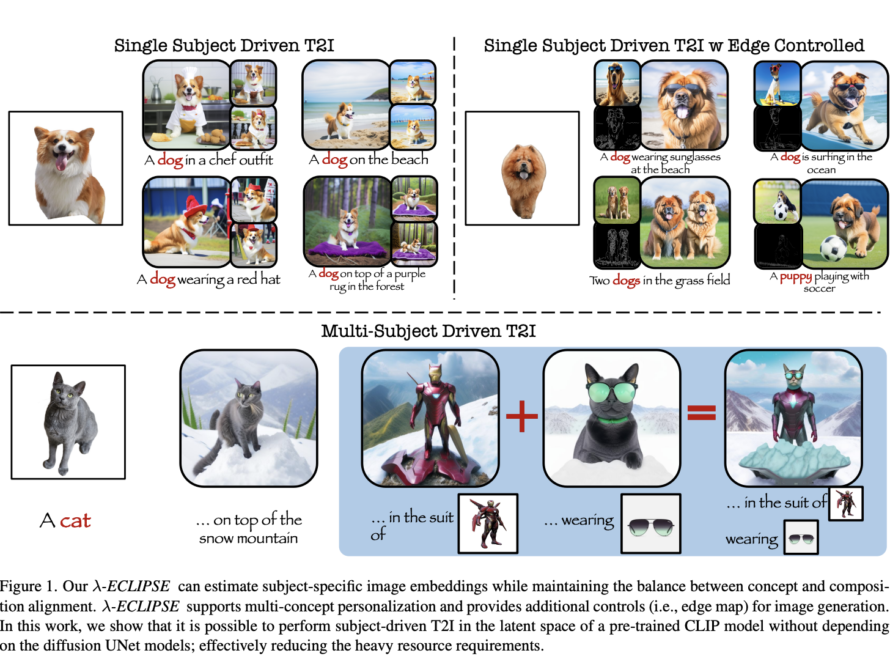

According to the current study, the ground-breaking DALL·E3 has remarkable skills in producing high-quality design pictures, distinguished by visually arresting layouts and graphics, as seen in Figure 1. These pictures do not, however, come without shortcomings. Their ongoing struggles include misrendered visual text, which frequently leaves off or adds additional characters (a condition also noted in ). Moreover, because these created pictures are essentially uneditable, modifying them requires intricate procedures like segmentation, erasing, and inpainting. The requirement that users supply comprehensive text prompts is another significant constraint. Creating good prompts for visual design production usually requires a high level of professional skill.

As Figure 2 illustrates, unlike DALL·E3, their COLE system can produce excellent quality graphic design graphics with only a basic requirement for user purposes. According to the research team, these three restrictions seriously impair the quality of graphic design pictures. A high-quality, scalable visual design generating system should ideally give a flexible editing area, generate accurate and high-quality typographic information for various uses, and demand low effort from users. Users could use human skills as needed to enhance the result further. This effort aims to establish a stable and effective autonomous text-to-design system that can produce excellent graphic design pictures from user intent prompts.

The research team from Microsoft Research Asia and Peking University propose COLE, a hierarchical generating approach to simplify the intricate process of creating graphic design images. Several specialized generation models, each intended to tackle a distinct sub-task, are involved in this process.

First and foremost, the emphasis is on imaginative design and interpretation, primarily on comprehending intentions. This is achieved by using cutting-edge LLMs, namely the Llama2-13B, and optimizing it using a large dataset of almost 100,000 curated intention-JSON pairings. Important design-related information, including textual descriptions, item captions, and backdrop captions, are included in the JSON file. The research team also offers optional parameters for additional purposes, such as object location.

Second, they focus on the arrangement and improvement of visuals, which includes two subtasks: the production of visual components and typographic features. Creating various visual features entails fine-tuning specialized cascaded diffusion models such as DeepFloyd/IF. These models are built in a way that guarantees a smooth transition between components, such as the layered object images and the adorned backdrop. The research team then predicts the typography JSON file using a typography Large Multimodal Model (LMM) constructed using LLaVA-1.5-13B. This uses the predicted JSON file from the Design LLM, the projected backdrop picture from a diffusion model, and the expected object image from a cascaded diffusion model. A visual renderer then assembles these components using the layout found in the anticipated JSON file.

Third, quality assurance and comments are provided at the end of the process to improve the overall quality of the design. A reflection LMM must be painstakingly adjusted, and GPT-4V(ision) must be used for a comprehensive, multifaceted quality examination. This last stage makes tweaking the JSON file easier as needed, including changing the text box’s sizes and positions. Finally, the research team built a DESIGNERINTENTION, comprising approximately 200 professional graphic design intention prompts spanning various categories and about 20 creative ones, to assess the system’s capabilities. They then compared their approach to the state-of-the-art image generation system currently in use, carried out exhaustive ablation experiments for each generation model on various sub-tasks, provided a thorough analysis of the graphic designs produced by their system, and had a conversation about the drawbacks and potential future directions of graphic design image generation.

Check out the Paper and Project. All credit for this research goes to the researchers of this project. Also, don’t forget to join our 33k+ ML SubReddit, 41k+ Facebook Community, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

If you like our work, you will love our newsletter..

Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Data Science and Artificial Intelligence from the Indian Institute of Technology(IIT), Bhilai. He spends most of his time working on projects aimed at harnessing the power of machine learning. His research interest is image processing and is passionate about building solutions around it. He loves to connect with people and collaborate on interesting projects.

[ad_2]

Source link