
Researchers from the University of Hong Kong, Alibaba Group, and Ant Group developed LivePhoto to address a gap in current text-to-video generation research: the temporal motions described in text are often overlooked. LivePhoto lets users animate a still image with text descriptions while reducing the ambiguity of mapping text to motion.

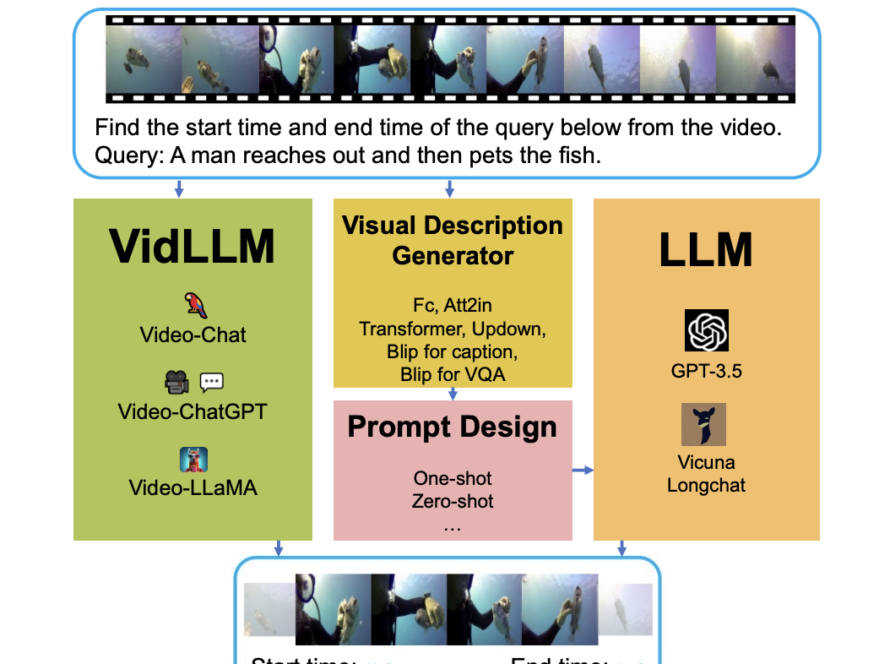

The study targets limitations of existing image animation methods. Earlier work typically relied on reference videos or was restricted to specific categories; LivePhoto instead uses text as a flexible control signal for generating customized videos across general domains. Text-to-video generation has evolved quickly, with recent approaches building on pre-trained text-to-image models and adding temporal layers. LivePhoto follows this line of work and additionally lets users control motion intensity, providing a versatile, customizable framework for text-driven image animation.
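To make "adding temporal layers" concrete, here is a minimal sketch of temporal self-attention, the kind of layer commonly used to turn a per-frame image model into a video model. It assumes features stored as `video[t][p]` (frame `t`, spatial position `p`) and uses identity query/key/value projections for brevity; real temporal layers learn these projections. The function names are hypothetical, and nothing here is taken from LivePhoto's code.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_self_attention(frames: List[Vector]) -> List[Vector]:
    """Attend across the frame axis for ONE spatial location.

    frames[t] is the feature vector of frame t at that location.
    Identity Q/K/V projections are an assumption made for brevity.
    """
    d = len(frames[0])
    out = []
    for q in frames:
        # Scaled dot-product scores between this frame and every frame.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in frames]
        weights = softmax(scores)
        # Each output is a weighted average of all frames' features.
        out.append([sum(w * v[j] for w, v in zip(weights, frames)) for j in range(d)])
    return out


def apply_temporal_layer(video: List[List[Vector]]) -> List[List[Vector]]:
    """Run the same temporal attention independently at every spatial position."""
    num_frames, num_pos = len(video), len(video[0])
    result: List[List[Vector]] = [[[] for _ in range(num_pos)] for _ in range(num_frames)]
    for p in range(num_pos):
        series = [video[t][p] for t in range(num_frames)]
        mixed = temporal_self_attention(series)
        for t in range(num_frames):
            result[t][p] = mixed[t]
    return result
```

Because attention runs only along the frame axis, the pre-trained spatial layers can keep processing each frame on its own while a layer like this exchanges information between frames.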

Beyond the text prompt, LivePhoto gives users explicit control over motion intensity, and it is designed to translate motion-related textual instructions into videos. This flexibility lets users generate diverse content from textual instructions, making LivePhoto a valuable contribution to text-driven image animation.

To make text-to-motion mapping effective, the system adds three components: a motion module for temporal modeling, a motion intensity estimation module, and a text re-weighting module. LivePhoto is built on Stable Diffusion, extended with these additional modules and layers for motion control and text-guided video generation. It uses content encoding of the reference image, cross-attention on the text, and noise inversion for guidance, so that the generated videos follow the textual instructions while preserving the global identity of the input image.
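The text re-weighting idea can be illustrated with a toy sketch: each token embedding is scaled by a weight in (0, 1) before it reaches cross-attention, so tokens with higher weights have more influence on the result. In LivePhoto the weights are produced by a dedicated module; here the per-token logits are supplied directly, and `MOTION_WORDS` and `toy_logits` are hypothetical stand-ins used only for this example.

```python
import math
from typing import List

# Hypothetical vocabulary used only by the toy logit function below.
MOTION_WORDS = {"runs", "jumps", "waves", "zoom", "pan"}


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def toy_logits(tokens: List[str]) -> List[float]:
    """Hypothetical stand-in for a learned weight predictor: favor motion words."""
    return [4.0 if tok in MOTION_WORDS else -4.0 for tok in tokens]


def reweight_tokens(embeddings: List[List[float]], logits: List[float]) -> List[List[float]]:
    """Scale each token embedding by sigmoid(logit), i.e. a weight in (0, 1)."""
    if len(embeddings) != len(logits):
        raise ValueError("expected one logit per token")
    return [[sigmoid(logit) * x for x in emb] for emb, logit in zip(embeddings, logits)]


tokens = ["a", "dog", "runs"]
embeddings = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
weighted = reweight_tokens(embeddings, toy_logits(tokens))
# "runs" keeps about 98% of its magnitude; "a" and "dog" shrink to about 2%.
```

Using a multiplicative weight rather than deleting tokens keeps every word available to cross-attention while letting the model emphasize the ones that describe motion.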

In the reported results, LivePhoto decodes motion-related textual instructions into videos, demonstrating that temporal motion can be controlled through text descriptions. Motion intensity serves as an additional control signal that users can adjust, adding flexibility when animating images with text.
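One simple way to derive a discrete motion-intensity label from a training clip is sketched below, under stated assumptions: the score is the mean absolute pixel change between consecutive frames, frames are flattened grayscale values in [0, 1], and the score is quantized into 10 equal-width levels. The paper's own estimation module may use a different measure and binning; `motion_score` and `intensity_level` are hypothetical names.

```python
from typing import List, Sequence

Frame = Sequence[float]  # flattened grayscale pixels in [0, 1] (assumption)


def motion_score(frames: List[Frame]) -> float:
    """Mean absolute pixel change between consecutive frames (a simple proxy)."""
    if len(frames) < 2:
        return 0.0
    diffs = []
    for prev, cur in zip(frames, frames[1:]):
        diffs.append(sum(abs(a - b) for a, b in zip(prev, cur)) / len(cur))
    return sum(diffs) / len(diffs)


def intensity_level(score: float, num_levels: int = 10, max_score: float = 1.0) -> int:
    """Quantize a score into levels 1..num_levels using equal-width bins."""
    clipped = min(max(score, 0.0), max_score)
    level = int(clipped / max_score * num_levels) + 1
    return min(level, num_levels)  # a score of exactly max_score maps to the top level
```

A label like this could accompany each clip as a conditioning input during training; at inference, a user would pick the level directly to ask for calmer or more vigorous motion.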

In conclusion, LivePhoto is a practical and flexible system for animating images with text descriptions and customizable motion control. Its motion module for temporal modeling, together with motion intensity estimation, decodes textual instructions into diverse videos, and it is effective across different actions, camera movements, and content. Its broad range of applications makes it a useful tool for creating animated images from text instructions.

Several directions could improve LivePhoto. Working at higher resolutions with stronger base models such as SD-XL may significantly improve overall performance. Better handling of how text describes motion speed and magnitude could improve alignment between the text and the generated motion. Super-resolution networks applied as post-processing may improve video smoothness and resolution, and higher-quality training data could improve image consistency in the generated videos. Future work could also refine the training pipeline, optimize the motion intensity estimation module, and explore LivePhoto's potential in other applications and domains.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I am a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.
