Generative foundational models are a class of artificial intelligence models designed to generate new data that resembles the data they were trained on. These models are used in many fields, including natural language processing, computer vision, and music generation. They learn the underlying patterns and structures of the training data and use that knowledge to generate new, similar data.

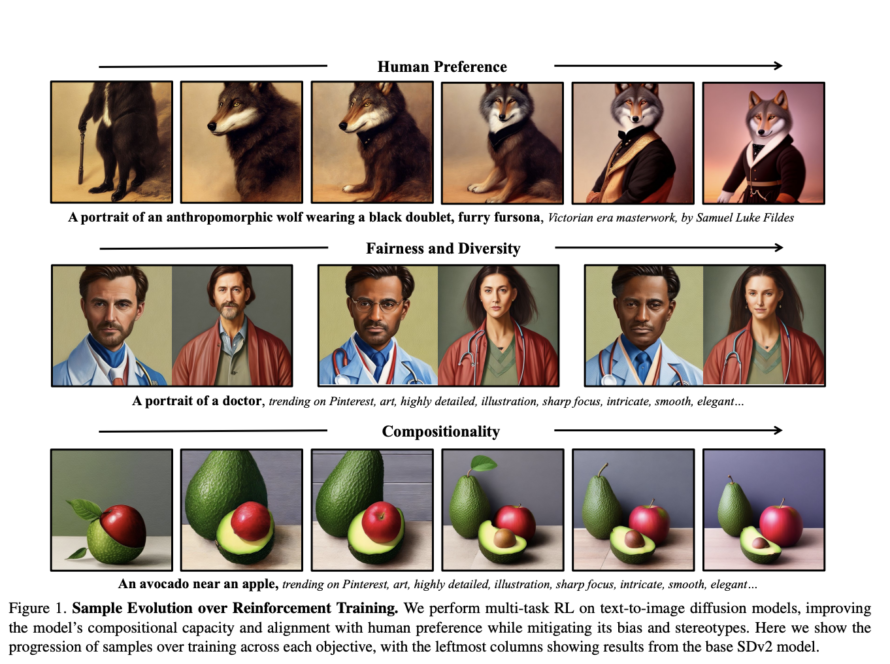

Generative foundational models have diverse applications, including image synthesis, text generation, recommendation systems, drug discovery, and more. They are continually evolving, with researchers working on improving their generation capabilities, such as generating more diverse and high-quality outputs, enhancing controllability, and understanding the ethical implications associated with their use.

Researchers at Stanford University, Northeastern University, and Salesforce AI Research built UniControl, a unified diffusion model for controllable visual generation in the wild that handles language and a variety of visual conditions simultaneously. UniControl can multitask: it encodes visual conditions from different tasks into a universal representation space, seeking a common structure among the tasks. To do so, it must accept a wide range of visual conditions from different tasks together with the language prompt.

UniControl generates images with pixel-level precision: the visual conditions chiefly shape the structure of the resulting images, while language prompts direct the style and context. To handle diverse visual conditions, the research team augmented pre-trained text-to-image diffusion models. They also introduced a task-aware HyperNet that modulates the diffusion models, allowing them to adapt to multiple image generation tasks based on different visual conditions at the same time.
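To make the idea of task-aware modulation concrete, here is a minimal sketch in plain Python. It is not UniControl's actual implementation: it assumes a simple FiLM-style scale-and-shift scheme, in which a small hypernetwork maps a task embedding to per-channel parameters that adjust a feature map. The task list, dimensions, random weights, and the function names `hypernet` and `modulate` are all hypothetical, chosen only for illustration.

```python
import random

rng = random.Random(0)

# Hypothetical task names and sizes, chosen only for this illustration.
TASKS = ["depth", "normal", "segmentation", "openpose", "bbox"]
EMBED_DIM = 8
CHANNELS = 4

# Stand-in task embeddings (random here; they would be learned in a real model).
task_embeddings = {t: [rng.gauss(0, 1) for _ in range(EMBED_DIM)] for t in TASKS}

# Stand-in hypernetwork: a single linear layer that maps a task embedding
# to 2 * CHANNELS numbers (one scale and one shift per channel).
weights = [[rng.gauss(0, 0.1) for _ in range(2 * CHANNELS)] for _ in range(EMBED_DIM)]


def hypernet(task):
    """Return per-channel (scale, shift) lists for the given task name."""
    emb = task_embeddings[task]
    out = [
        sum(emb[i] * weights[i][j] for i in range(EMBED_DIM))
        for j in range(2 * CHANNELS)
    ]
    scale = [1.0 + s for s in out[:CHANNELS]]  # centered on the identity
    shift = out[CHANNELS:]
    return scale, shift


def modulate(features, task):
    """Apply task-specific scale and shift to features shaped [channel][pixel]."""
    scale, shift = hypernet(task)
    return [
        [scale[c] * value + shift[c] for value in channel]
        for c, channel in enumerate(features)
    ]


# The same shared features are adjusted differently depending on the task.
features = [[rng.gauss(0, 1) for _ in range(16)] for _ in range(CHANNELS)]
depth_out = modulate(features, "depth")
pose_out = modulate(features, "openpose")
```

The point of the sketch is that a single set of shared features can serve several tasks, with only a small task-conditioned signal changing how those features are used. The mechanism described in the paper may differ in where and how the modulation is applied.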

The model demonstrates a more nuanced understanding than ControlNet of 3D geometric guidance from depth maps and surface normals, and the depth map conditions produce visibly more accurate outputs. In the segmentation, OpenPose, and object bounding box tasks, the images it generates are better aligned with the given conditions than those generated by ControlNet, giving higher fidelity to the input prompts. Experimental results show that UniControl often surpasses single-task controlled methods of comparable model size.

UniControl unifies the various visual conditions of ControlNet and can perform zero-shot learning on previously unseen tasks. At present, UniControl takes only a single visual condition at a time, yet it is still capable of both multitasking and zero-shot learning. This highlights its versatility and potential for widespread adoption in the wild.

However, the model still inherits the limitations of diffusion-based image generation models. Specifically, it is limited by its training data, which the researchers obtained from a subset of the LAION-Aesthetics datasets and which is biased. UniControl could be improved if better open-source datasets become available that help block the creation of biased, toxic, sexualized, or other harmful content.

Check out the Paper, GitHub, and Project Page. All credit for this research goes to the researchers of this project.


Arshad is an intern at MarktechPost. He is currently pursuing an Int. MSc in Physics at the Indian Institute of Technology Kharagpur. He believes that understanding things at a fundamental level leads to new discoveries, which in turn lead to advances in technology. He is passionate about understanding nature at a fundamental level with the help of tools such as mathematical models, ML models, and AI.
