[ad_1]

In the previous two articles we saw how we can implement a basic classifier based on Rosenblatt’s perceptron and how this classifier can be improved by using the adaptive linear neuron algorithm (adaline). These two articles cover the foundations before attempting to implement an artificial neural network with many layers. Moving from adaline to deep learning is a bigger leap and many machine learning practitioners will opt directly for an open source library like PyTorch. Using such a specialised machine learning library is of course recommended for developing a model in production, but not necessarily for learning the fundamental concepts of multilayer neural networks. This article builds a multilayer neural network from scratch. Instead of solving a binary classification problem we will focus on a multiclass one. We will be using the sigmoid activation function after each layer, including the output one. Essentially we train a model that for each input, comprising a vector of features, produces a vector with length equal to the number of classes to be predicted. Each element of the output vector is in the range [0, 1] and can be understood as the “probability” of each class.

The purpose of the article is to become comfortable with the mathematical notation used for describing mathematically neural networks, understand the role of the various matrices with weights and biases, and derive the formulas for updating the weights and biases to minimise the loss function. The implementation allows for any number of hidden layers with arbitrary dimensions. Most tutorials assume a fixed architecture but this article uses a carefully chosen mathematical notation that supports generalisation. In this way we can also run simple numerical experiments to examine the predictive performance as a function of the number and size of the hidden layers.

As in the earlier articles, I used the online LaTeX equation editor to develop the LaTeX code for the equation and then the chrome plugin Maths Equations Anywhere to render the equation into an image. All LaTex code is provided at the end of the article if you need to render it again. Getting the notation right is part of the journey in machine learning, and essential for understanding neural networks. It is vital to scrutinise the formulas, and pay attention to the various indices and the rules for matrix multiplication. Implementation in code becomes trivial once the model is correctly formulated on paper.

All code used in the article can be found in the accompanying repository. The article covers the following topics

∘ What is a multilayer neural network?

∘ Activation

∘ Loss function

∘ Backpropagation

∘ Implementation

∘ Dataset

∘ Training the model

∘ Hyperparameter tuning

∘ Conclusions

∘ LaTeX code of equations used in the article

What is a multilayer neural network?

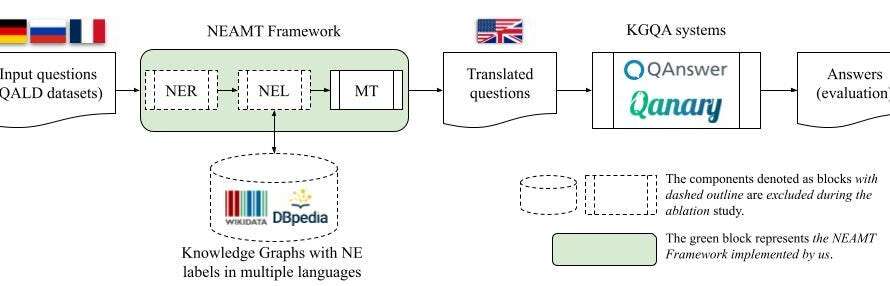

This section introduces the architecture of a generalised, feedforward, fully-connected multilayer neural network. There are a lot of terms to go through here as we work our way through Figure 1 below.

For every prediction, the network accepts a vector of features as input

that can also be understood as a matrix with shape (1, n⁰). The network uses L layers and produces a vector as an output

that can be understood as a matrix with shape (1, nᴸ) where nᴸ is the number of classes in the multiclass classification problem we need to solve. Every float in this matrix lies in the range [0, 1] and the index of the largest element corresponds to the predicted class. The (L) notation in the superscript is used to refer to a particular layer, in this case the last one.

But how do we generate this prediction? Let’s focus on the first element of the first layer (the input is not considered a layer)

We first compute the net input that is essentially an inner product of the input vector with a set of weights with the addition of a bias term. The second operation is the application of the activation function σ(z) to which we will return later. For now it is important to keep in mind that the activation function is essentially a scalar operation.

We can compute all elements of the first layer in the same way

From the above we can deduce that we have introduced n¹ x n⁰ weights and n¹ bias terms that will need to be fitted when the model is trained. These calculations can also be expressed in matrix form

Pay close attention to the shape of the matrices. The net output is a result of a matrix multiplication of two matrices with shape (1, n⁰) and (n⁰, n¹) that results in a matrix with shape (1, n¹), to which we add another matrix with the bias terms that has the same (1, n¹) shape. Note that we introduced the transpose of the weight matrix. The activation function applies to every element of this matrix and hence the activated values of layer 1 are also a matrix with shape (1, n¹).

The above can be readily generalised for every layer in the neural network. Layer k accepts as input nᵏ⁻¹ values and produces nᵏ activated values

Layer k introduces nᵏ x nᵏ⁻¹ weights and nᵏ bias terms that will need to be fitted when the model is trained. The total number of weights and bias terms is

so if we assume an input vector with 784 elements (dimension of a low resolution image in gray scale), a single hidden layer with 50 nodes and 10 classes in the output we need to optimise 785*50+51*10 = 39,760 parameters. The number of parameters grows further if we increase the number of hidden layers and the number of nodes in these layers. Optimising an objective function with so many parameters is not a trivial undertaking and this is why it took some time from the time adaline was introduced until we discovered how to train deep networks in the mid 80s.

This section essentially covers what is known as the forward pass, i.e. how we apply a series of matrix multiplications, matrix additions and element wise activations to convert the input vector to an output vector. If you pay close attention we assumed that the input was a single sample represented as a matrix with shape (1, n⁰). The notation holds even if we we feed into the network a batch of samples represented as a matrix with shape (N, n⁰). There is only small complexity when it comes to the bias terms. If we focus on the first layer we sum a matrix with shape (N, n¹) to a bias matrix with shape (1, n¹). For this to work the bias matrix has its first row replicated as many times as the number of samples in the batch we use in the forward pass. This is such a natural operation that NumPy does it automatically in what is called broadcasting. When we apply forward pass to a batch of inputs it is perhaps cleaner to use capital letters for all vectors that become matrices, i.e.

Note that I assumed that broadcasting was applied to the bias terms leading to a matrix with as many rows as the number of samples in the batch.

Operating with batches is typical with deep neural networks. We can see that as the number of samples N increases we will need more memory to store the various matrices and carry out the matrix multiplications. In addition, using only part of training set for updating the weights means we will be updating the parameters several times in each pass of the training set (epoch) leading to faster convergence. There is an additional benefit that is perhaps less obvious. The network uses activation functions that, unlike the activation in adaline, are not the identity. In fact they are not even linear, which makes the loss function non convex. Using batches introduces noise that is believed to help escaping shallow local minima. A suitably chosen learning rate further assists with this.

As a final note before we move on, the term feedforward comes from the fact that each layer is using as input the output of the previous layer without using loops that lead to the so-called recurrent neural networks.

Activation

Enabling the neural network to solve complex problem requires introducing some form of nonlinearity. This is achieved by using an activation function in each layer. There are many choices. For this article we will be using the sigmoid (logistic) activation function that we can visualise with

that produces

The code also includes all imports we will need throughout the article.

The activation function maps any float to the range 0 to 1. In reality the sigmoid is a more suitable activation of the final layer for binary classification problems. For multiclass problems it would have been more appropriate to use softmax to normalize the output of the neural network to a probability distribution over predicted output classes. One way to think about this is that softmax enforces that post activation the sum of the entries of the output vector must add up to 1, that is not the case with sigmoid. Another way to think about it is that the sigmoid essentially converts the logits (log odds) to a one-versus-all (OvA) probability. Still, we will use the sigmoid activation function to stay as close as possible to adaline because the softmax is not an element wise operation and this will introduce some complexities in the back propagation algorithm. I leave this as an exercise for the reader.

Loss function

The loss function used for adaline was the mean square error. In practice a multiclass classification problem would use a multiclass cross-entropy loss. In order to remain as close to adaline as possible, and to facilitate the analytical calculation of the gradients of the loss function with respect to the parameters, we will stick on the mean square error loss function. Every sample in the training set, belongs to one of the nᴸ classes and hence the loss function can be expressed as

where the first summation is over all samples and the second over classes. The above implies that the known class for sample i has been converted to a one-hot-encoding, i.e. a matrix with shape (1, nᴸ) containing zeros apart from the element that corresponds to the sample class that is one. We adopted one more notation convention so that [j] in the superscript is used to refer to sample j. The summation above does not need to use all samples in the training set. In practice it will be applied in batches of N’ samples with N’<<N.

Backpropagation

The loss function is a scalar that depends on tens or hundreds of thousands of parameters, comprising weights and bias terms. Typically, these parameters are initialised with random numbers and are updated iteratively so that the loss function is minimised using the gradient of the loss function with regard to each parameter. In the case of adaline, the analytical derivation of the gradients was straightforward. For multilayer neural networks the derivation is more involved but remains tractable if we adopt a clever strategy. We enter the world of the back propagation but fear not. Backpropagation essentially boils down to a successive application of the chain differentiation rule from the right to the left.

Let’s come back to the loss function. It depends on the activated values of the last layer, so we can first compute the derivatives with regard to those

The above can be understood as the (j, i) element of a derivate matrix with shape (N, nᴸ) and can be written in matrix form as

where both matrices in the right hand side have shape (N, nᴸ). The activated values of the last layer are computed by applying the sigmoid activation function on each element of the net input matrix of the last layer. Hence, to compute the derivatives of the loss function with regard to each element of this net input matrix of the last layer we simply need to remind ourselves on how to compute the derivative of a nested function with the outer one being the sigmoid function:

The star multiplication denotes element wise multiplication. The result of this formula is a matrix with shape (N, nᴸ). If you have difficulties computing the derivative of the sigmoid function please check here.

We are now ready to compute the derivative of the loss function with regard to the weights of the L-1 layer; this is the first set of weights we encounter when we move from right to left

This leads to a matrix with the same shape as the weights of the L-1 layer. We next need to compute the derivative of the net input of the L layer with regard to the weights of the L-1 layer. If we pick one element of the net input matrix of the last layer and one of these weights we have

If you have trouble to understand the above, think that for every sample j, the i element of the net input of the L layer only depends on the weights of the L-1 layer for which the first index is also i. Hence, we can eliminate one of the summations in the derivative

We can express all these derivatives in a matrix notation using

Essentially the implicit summation in the matrix multiplication absorbs the summation over the samples. Follow along with the shapes of the multiplied matrices and you will see that the resulting derivative matrix has the same shape as the weight matrix used to calculate the net input of the L layer. Although the number of elements in the resulting matrix is limited to the product of the number of nodes of the last two layers (the shape is (nᴸ, nᴸ⁻¹)), the multiplied matrices are much larger and hence are typically more memory consuming. Hence, the need to use batches when training the model.

The derivatives of the loss function with respect to the bias terms used for calculating the net input of the last layer can be computed similarly as for the weights to give

that leads to a matrix with shape (1, nᴸ).

We have just computed all derivatives of the loss function with regard to the weights and bias terms used for computing the net input of the last layer. We now turn our attention to the gradients with the regard to the weights and bias terms of the previous layer (these parameters will have the superscript index L-2). Hopefully we can start identifying patterns so that we can apply them to compute the derivates with regard to the weights and bias terms for k=0,..,L-2. We could see these patterns emerge if we compute the derivative of the loss function with regard to the activated values of the L-1 layer. These should form a matrix with shape (N, nᴸ⁻¹) that is computed as

Once we have the derivatives of the loss with regard to the activated values of layer L-1 we can proceed with calculating the derivatives of the loss function with regard to the net input of the layer L-1 and then with regard to the weights and bias terms with index L-2.

Let’s recap how we backpropagate by one layer. We assume we have computed the derivative of the loss function with regard to the weights and bias terms with index k and we need to compute the derivates of the loss function with regard to the weights and bias terms with index k-1. We need to carry out 4 operations:

All operations are vectorised. We can already start imaging how we could implement these operations in a class. My understanding is that when one uses a specialised library to add a fully connected linear layer with an activation function, this is what happens behind the scenes! It is nice not to worry about the mathematical notation, but my suggestion would be to go through these derivations at least once.

Implementation

In this section we provide the implementation of a generalised, feedforward, multilayer neural network. The API draws some analogies to the one found in specialised deep learning libraries such as PyTorch

The code contains two utility functions: sigmoid() applies the sigmoid (logistic) activation function to a float (or NumPy array), and int_to_onehot() takes a list of integers with the class of each sample and returns their one-hot-encoding representation.

The class MultilayerNeuralNetClassifier contains the neural net implementation. The initialisation constructor assigns random numbers to the weights and bias terms of each layer. As an example if we construct a neural network with layers=[784, 50, 10], we will be using 784 input features, a hidden layer with 50 nodes and 10 classes as output. This generalised implementation allows changing both the number of hidden layers and the number of nodes in the hidden layers. We will exploit this when we do hyperparameter tuning later on. For reproducibility we use a seed for the random number generator to initialise the weights.

The forward method returns the activated values for each layer as a list of matrices. The method works with a single sample or an array of samples. The last of the returned matrices contains the model predictions for the class membership of each sample. Once the model is trained only this matrix is used for making predictions. However, whilst the model is being trained we need the activated values for all layers as we will see below and this is why the forward method returns all of them. Assuming that the network was initialised with layers=[784, 50, 10], the forward method will return a list of two matrices, the first one with shape (N, 50) and the second one with shape (N, 10), assuming the input x has N samples, i.e. it is a matrix with shape (N, 784).

The backward method implements backpropagation, i.e. all the analytically computed derivatives of the loss function as described in the previous section. The last layer is special because we need to compute the derivatives of the loss function with regard to the model output using the known classes. The first layer is special because we need to use the input instead of the activated values of the previous layer. The middle layers are all the same. We simply iterate over the layers backwards. The code reflects fully the analytically derived formulas. By using NumPy we vectorise all operations that speeds up execution. The method returns a tuple of two lists. The first list contains the matrices with the derivatives of the loss function with regard to the weights of each layer. Assuming that the network was initialised with layers=[784, 50, 10], the list will contain two matrices with shapes (784, 50) and (50, 10). The second list contains the vectors with the derivatives of the loss function with regard to the bias terms of each layer. Assuming that the network was initialised with layers=[784, 50, 10], the list will contain two vectors with shapes (50, ) and (10,).

Reflecting back on my learnings from this article, I felt that the implementation was straightforward. The hardest part was to come up with a robust mathematical notation and work out the gradients on paper. Still, it is easy to make mistakes that may not be easy to detect even if the optimisation seems to converge. This brings me to the special backward_numerical method. This method is used for neither training the model nor making predictions. It uses finite (central) differences to estimate the derivatives of the loss function with regard to the weights and bias terms of the chosen layer. The numerical derivatives can be compared with the analytically computed ones returned by the backward function to ensure that the implementation is correct. This method would be too slow to be used for training the model as it requires two forward passes for each derivative and in our trivial example with layers=[784, 50, 10] there are 39,760 such derivatives! But it is a lifesaver. Personally I would not have managed to debug the code without it. If you want to keep a key message from this article, it would be the usefulness of numerical differentiation for double checking your analytically derived gradients. We can check the correctness of the gradients with an untrained model

that produces

layer 3: 300 out of 300 weight gradients are numerically equal

layer 3:10 out of 10 bias term gradients are numerically equal

layer 2: 1200 out of 1200 weight gradients are numerically equal

layer 2:30 out of 30 bias term gradients are numerically equal

layer 1: 2000 out of 2000 weight gradients are numerically equal

layer 1:40 out of 40 bias term gradients are numerically equal

Gradients look in order!

Dataset

We will need a dataset for building our first model. A famous one often used in pattern recognition experiments is the MNIST handwritten digits. We can find more details about this dataset in the OpenML dataset repository. All datasets in OpenML are subject to the CC BY 4.0 license that permits copying, redistributing and transforming the material in any medium and for any purpose.

The dataset contains 70,000 digit images and the corresponding labels. Conveniently, the digits have been size-normalized and centered in a fixed-size 28×28 image by computing the center of mass of the pixels, and translating the image so as to position this point at the center of the 28×28 field. The dataset can be conveniently retrieved using scikit-learn

that prints

original X: X.shape=(70000, 784), X.dtype=dtype('int64'), X.min()=0, X.max()=255

original y: y.shape=(70000,), y.dtype=dtype('O')

processed X: X.shape=(70000, 784), X.dtype=dtype('float64'), X.min()=-1.0, X.max()=1.0

processed y: y.shape=(70000,), y.dtype=dtype('int32')

class counts: 0:6903, 1:7877, 2:6990, 3:7141, 4:6824, 5:6313, 6:6876, 7:7293, 8:6825, 9:6958

We can see that each image is available as a vector with 784 integers between 0 and 255 that were converted to floats in the range [-0.5, 0.5]. This is perhaps a bit different than the typical feature scaling in scikit-learn where scaling happens per feature rather per sample. The class labels were retrieved as strings and converted to integers. The dataset is reasonably balanced.

We next visualise ten images for each digit to obtain a feeling on the variations in hand writing

that produces

We can foresee that some digits may be confused by the model, e.g. the last 9 resembles 8. There may also be hand writing variations that are not predicted well, such as 7 digits written with a horizontal line in the middle, depending on how often such variations are represented in the training set. We now have a neural network implementation and a dataset to use it with. In the next section we will provide the necessary code for training the model before we look into hyperparameter tuning.

Training the model

The first action we need to take is to split the dataset into a training set, and an external (hold-out) test set. We can readily do so using scikit-learn

We use stratification so that the percentage of each class is roughly equal in both the training set and the external (hold-out) dataset. The external (hold-out) test set contains 10,000 samples and will not be used for anything other than assessing the model performance. In this section we will use the 60,000 samples for training set without any hyperparameter tuning.

When deriving the gradients of the loss function with regard to the model parameters we show that it is necessary to carry out several matrix multiplications and some of these matrices have as many rows as the number of samples. Given that typically the number of samples is quite large we will need a significant amount of memory. To alleviate this we will be using mini batches in the same way we used mini batches during the gradient descent optimisation of the adaline model. Typically, each batch can contain 100–500 samples. Reducing the batch size increases the convergence speed because we make more parameter updates within the the same pass of the training set (epoch), but we also increase the noise. We need to strike a balance. First we provide a generator that accepts the training set and the batch size and returns the batches

The generator returns batches of equal size that by default contain 100 samples. The total number of samples may not be a multiple of the batch size and hence some samples will not be returned in a given pass through the training set. Th number of skipped samples is smaller than the batch size and the set of samples left out changes every time the generator is used, assuming we do not reset the random number generator. Hence, this is not critical. As we will be passing though the training sets multiple times in the different epochs we will eventually use the training set fully. The reason for using batches of a constant size is that we will be updating the model parameters after each batch and a small batch can increase the noise and prevent convergence, especially if the samples in the batch happen to be outliers.

When the model is initiated we expect a low accuracy that we can confirm with

that gives an accuracy of approximately 9.5%. This is more or less expected for a reasonably balanced dataset as there are 10 classes. We now have the means to monitor the loss and the accuracy of each batch passed to the forward pass that we will exploit during training. Let’s write the final piece of code to iterate over the epochs and mini batches, update the model parameters and monitor how the loss and accuracy evolves in both the training set and external (hold-out) test set.

Using this function training becomes a single line of code

that produces

epoch 0: loss_training=0.096 | accuracy_training=0.236 | loss_test=0.088 | accuracy_test=0.285

epoch 1: loss_training=0.086 | accuracy_training=0.333 | loss_test=0.085 | accuracy_test=0.367

epoch 2: loss_training=0.083 | accuracy_training=0.430 | loss_test=0.081 | accuracy_test=0.479

epoch 3: loss_training=0.078 | accuracy_training=0.532 | loss_test=0.075 | accuracy_test=0.568

epoch 4: loss_training=0.072 | accuracy_training=0.609 | loss_test=0.069 | accuracy_test=0.629

epoch 5: loss_training=0.066 | accuracy_training=0.657 | loss_test=0.063 | accuracy_test=0.673

epoch 6: loss_training=0.060 | accuracy_training=0.691 | loss_test=0.057 | accuracy_test=0.701

epoch 7: loss_training=0.055 | accuracy_training=0.717 | loss_test=0.052 | accuracy_test=0.725

epoch 8: loss_training=0.050 | accuracy_training=0.739 | loss_test=0.049 | accuracy_test=0.742

epoch 9: loss_training=0.047 | accuracy_training=0.759 | loss_test=0.045 | accuracy_test=0.765

We can see that after the ten epochs the accuracy for the training set has reached approximately 76%, whilst the accuracy of the external (hold-out) test set is slightly higher, indicating that the model has not been overfitted.

The loss of the training set keeps decreasing and hence convergence has not been reached yet. The model allows hot starting so we could run another ten epochs by repeating the single line of code above. Instead, we will initiate the model again and run it for 100 epochs, increasing the batch size to 200 at the same time. We provide the complete code for doing so.

We first plot the training loss and its rate of change as a function of the epoch number

that produces

We can see the model has converged reasonably well as the rate of the change of the training loss has become more than two orders of magnitude smaller compared to its value at the beginning of the training. I am not sure why we observe a reduction in convergence speed at around epoch 10; I can only speculate that the optimiser escaped a local minimum.

We can also plot the accuracy of the training set and the test set as a function of the epoch number

that produces

The accuracy reaches approximately 90% after about 50 epochs for both the training set and external (hold-out) test set, suggesting that there is no/little overfitting. We just trained our first, custom built multilayer neural network with one hidden layer!

Hyperparameter tuning

In this previous section we chose an arbitrary network architecture and fitted the model parameters. In this section we proceed with a basic hyperparameter tuning by varying the number of hidden layers (ranging from 1 to 3), the number of nodes in the hidden layers (ranging from 10 to 50 in increments of 10) and the learning rate (using the values 0.1, 0.2 and 0.3). We kept the batch size constant at 200 samples per batch. Overall, we tried 45 parameter combinations. We will employ 6-fold cross validation (not nested) which means 6 model trainings per parameter combination, which translates to 270 model trainings in total. In each fold we will be using 50,000 samples for training and 10,000 samples for measuring the accuracy (called validation in the code). To enhance the chances to achieve convergence we will perform 250 epochs for each model fitting. The total execution time was ~12 hours on a single processor (Intel Xeon Gold 3.5GHz). This is more or less what we can reasonably run on a CPU. The training speed could be increased using multiprocessing. In fact, the training would be way faster using a specialised deep learning library like PyTorch on GPUs, such as the freely available T4 GPUs on Google Colab.

This code iterates over all hyperparameter values and folds and stores the loss and accuracy for both the training (50,000 samples) and validation (10,000 samples) in a pandas dataframe. The dataframe is used to find the optimal hyperparameters

that produces

optimal parameters: n_hidden_layers=1, n_hidden_nodes=50, learning rate=0.3

best mean cross validation accuracy: 0.944

| n_hidden_layers | 10 | 20 | 30 | 40 | 50 |

|------------------:|---------:|---------:|---------:|---------:|--------:|

| 1 | 0.905217 | 0.927083 | 0.936883 | 0.939067 | 0.9441 |

| 2 | 0.8476 | 0.925567 | 0.933817 | 0.93725 | 0.9415 |

| 3 | 0.112533 | 0.305133 | 0.779133 | 0.912867 | 0.92285 |

We can see that there is little benefit in increasing the number of layers. Perhaps we could have gained slightly better performance using a larger first hidden layer as the hyperparameter tuning hit the bound of 50 nodes. Some mean cross-validation accuracies are quite low that could be indicative of poor convergence (e.g. when using 3 hidden layers with 10 nodes each). We did not investigate further but this would be typically required before concluding on the optimal network geometry. I would expect that allowing for more epochs would increase accuracy further particular with the larger networks.

A final step is to retrain the model with all samples other than the external (hold-out) set that are only used for the final evaluation

The last 5 epochs are

epoch 245: loss_training=0.008 | accuracy_training=0.958 | loss_test=0.009 | accuracy_test=0.946

epoch 246: loss_training=0.008 | accuracy_training=0.958 | loss_test=0.009 | accuracy_test=0.947

epoch 247: loss_training=0.008 | accuracy_training=0.958 | loss_test=0.009 | accuracy_test=0.947

epoch 248: loss_training=0.008 | accuracy_training=0.958 | loss_test=0.009 | accuracy_test=0.946

epoch 249: loss_training=0.008 | accuracy_training=0.958 | loss_test=0.009 | accuracy_test=0.946

We achieved ~95% accuracy with the external (hold-out) test set. This is magical if we consider that we started with a blank piece of paper!

Conclusions

This article demonstrated how we can build a multilayer, feedforward, fully connected neural network from scratch. The network was used for solving a multiclass classification problem. The implementation has been generalised to allow for any number of hidden layers with any number of nodes. This facilitates hyperparameter tuning by varying the number of layers and units in them. However, we need to keep in mind that the loss gradients become smaller and smaller as the depth of the neural network increases. This is known as the vanishing gradient problem and requires using specialised training algorithms once the depth exceeds a certain threshold, which is out of the scope of this article.

Our vanilla implementation of a multilayer neural network has hopefully educational value. Using it in practice would require several improvements though. First of all, overfitting would need to be addressed, by employing some form of drop out. Other improvements, such as the addition of skip-connections and the variation of the learning rate during training, may be beneficial too. In addition, the network architecture itself can be optimised, e.g. by using a convolutional neural network that would be more appropriate for classifying images. Such improvements are best attempted using a specialised library like PyTorch. When developing algorithms from scratch one needs to be wary of the time it takes and where to draw the line so that the endeavour remains educational without being extremely time consuming. I hope this article strikes a good balance in this sense. If you are intrigued I would recommend this book for further study.

LaTeX code of equations used in the article

The equations used in the article can be found in the gist below, in case you would like to render them again.

[ad_2]

Source link