
From drug discovery to species classification, credit scoring to cybersecurity and more, the random forest is a popular and powerful algorithm for modeling our complex world. Its versatility and predictive prowess would seem to require cutting-edge complexity, but if we dig into what a random forest actually is, we see a shockingly simple set of repeating steps.

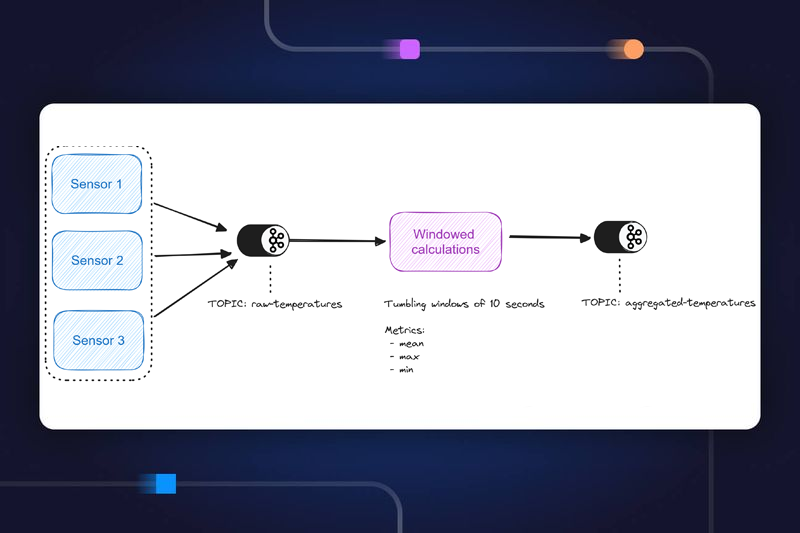

I find that the best way to learn something is to play with it. So to develop an intuition for how random forests work, let’s build one by hand in Python, starting with a decision tree and expanding to the full forest. We’ll see first-hand how flexible and interpretable this algorithm is for both classification and regression. And while this project may sound complicated, there are really only two core concepts we’ll need to learn: 1) how to iteratively partition data, and 2) how to quantify how well data is partitioned.
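To make those two concepts concrete before we go further, here is a minimal sketch of a single partition step and one way to score it. The scoring metric is Gini impurity, which is an assumption on my part (it is one common choice, and nothing above commits to it), and the helper names `gini`, `split`, and `weighted_gini` are hypothetical, as is the toy data.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0.0 for a pure group, larger for a more mixed one."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def split(rows, labels, feature, threshold):
    """Partition the labels by whether row[feature] <= threshold."""
    left = [y for x, y in zip(rows, labels) if x[feature] <= threshold]
    right = [y for x, y in zip(rows, labels) if x[feature] > threshold]
    return left, right

def weighted_gini(left, right):
    """Score a partition: impurity of each side, weighted by its size."""
    n = len(left) + len(right)
    return (len(left) * gini(left) + len(right) * gini(right)) / n

# Toy data (made up for illustration): one feature, two classes.
rows = [[1.0], [2.0], [8.0], [9.0]]
labels = [0, 0, 1, 1]

clean = weighted_gini(*split(rows, labels, feature=0, threshold=5.0))
messy = weighted_gini(*split(rows, labels, feature=0, threshold=1.5))
print(clean, messy)  # the lower score marks the better partition
```

A threshold of 5.0 separates the two classes perfectly, so it scores lower than a threshold of 1.5, which leaves one side mixed. Repeating this "try a split, score it, keep the best" step on each resulting group is the iterative partitioning mentioned above.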

Decision tree inference

A decision tree is a supervised learning algorithm that identifies a branching set of binary rules that map features to labels in a dataset. Unlike algorithms such as logistic regression, where the output is an equation, the decision tree algorithm is nonparametric, meaning it doesn’t make strong assumptions about the relationship between features and labels. This means that decision trees are free to grow in whatever way best partitions their training data, so the resulting structure will vary between datasets.
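As a rough sketch of what "a branching set of binary rules" can look like in code, the snippet below hand-builds a tiny tree and walks a feature vector through it. Everything here is illustrative: the `Node` class, the `predict` function, the two features (session length in minutes and pages viewed), and the thresholds are all hypothetical, and a real tree would learn its rules from training data rather than have them typed in.

```python
from dataclasses import dataclass
from typing import Optional, Union

@dataclass
class Node:
    # Internal nodes hold a rule: "is x[feature] <= threshold?"
    feature: Optional[int] = None
    threshold: Optional[float] = None
    left: Optional["Node"] = None    # followed when the rule is true
    right: Optional["Node"] = None   # followed when the rule is false
    # Leaves hold a prediction instead of a rule.
    value: Optional[Union[int, float, str]] = None

def predict(node, x):
    """Follow binary rules from the root until a leaf is reached."""
    while node.value is None:
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.value

# Hypothetical hand-written tree; x = [session_minutes, pages_viewed].
tree = Node(
    feature=0, threshold=5.0,
    left=Node(value="no purchase"),
    right=Node(
        feature=1, threshold=2.0,
        left=Node(value="no purchase"),
        right=Node(value="purchase"),
    ),
)

print(predict(tree, [3.0, 10]))
print(predict(tree, [12.0, 4]))
```

Because the model is just nested rules, its shape is not fixed in advance: a different dataset would produce different features, thresholds, and depth.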

One major benefit of decision trees is their explainability: each step the tree takes in deciding how to predict a category (for classification) or continuous value (for regression) can be seen in the tree nodes. A model that predicts whether a shopper will buy a product they viewed online, for example, might look like this.

Starting with the root, each node in the tree asks a binary question (e.g., “Was the session length longer than 5 minutes?”) and passes the feature vector to one of two child nodes depending on the…
