Diffusion models are a class of generative models that gradually add noise to the training data and then learn to recover the original data by reversing that noising process. This approach lets them achieve state-of-the-art image quality, making them one of the most significant developments in Machine Learning (ML) in the past few years. Their performance, however, depends heavily on the distribution of the training data (mainly web-scale text-image pairs), which leads to issues such as mismatch with human aesthetic preferences, biases, and stereotypes.
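To make the noising step concrete, here is a minimal sketch of the forward process. It assumes the standard DDPM-style formulation with a linear noise schedule, which the article does not spell out; the function names and schedule values are illustrative, not taken from the paper.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t."""
    alpha_bars, product = [], 1.0
    for beta in betas:
        product *= 1.0 - beta
        alpha_bars.append(product)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]
    return xt, noise


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
x0 = [0.5, -0.2, 0.9]  # a toy "image" with three pixels
xt, noise = add_noise(x0, t=500, alpha_bars=alpha_bars, rng=rng)
```

A denoising network is trained to predict `noise` from `xt` and `t`; generation then runs the process in reverse, starting from pure noise.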

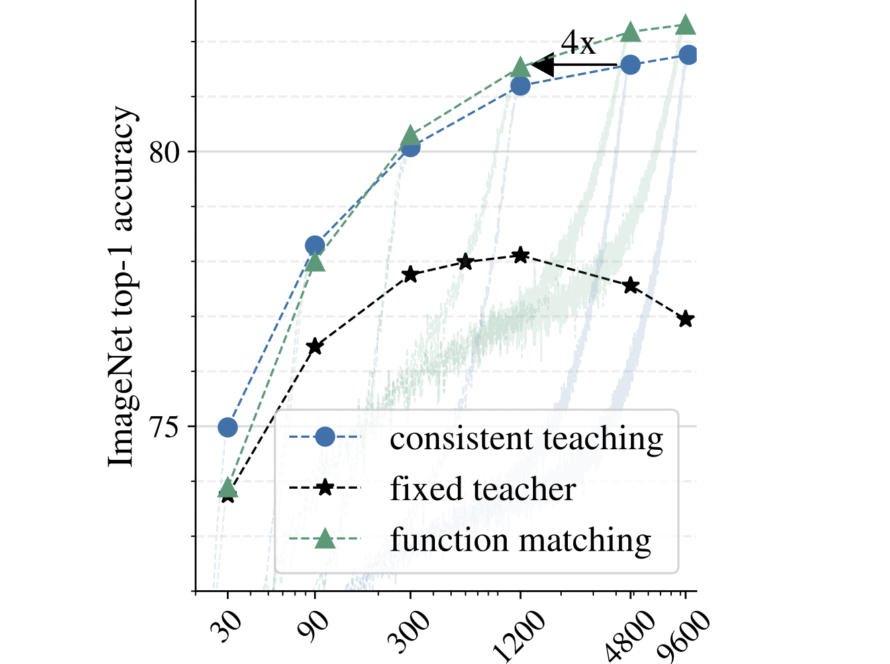

Previous work has addressed these issues, and sought better controllability, by using curated datasets or by intervening in the sampling process. However, sampling-time interventions affect the model's sampling time without improving its inherent capabilities. In this work, researchers from Pinterest propose a reinforcement learning (RL) framework for fine-tuning diffusion models so that their outputs are better aligned with human preferences.

The proposed framework enables training over millions of prompts across diverse tasks. To ensure that the model generates diverse outputs, the researchers used a distribution-based reward function for RL fine-tuning. They also performed multi-task joint training so that the model can handle a diverse set of objectives simultaneously.
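One way to picture a distribution-based reward is as a score computed over a whole batch of generations rather than per image. The sketch below is an illustration under stated assumptions, not the paper's reward: it assumes some hypothetical classifier has already assigned each generated image a category label, and it rewards the batch by how close its label distribution is to uniform, using total variation distance.

```python
from collections import Counter


def distribution_reward(labels, categories):
    """Negative total variation distance between the batch's label
    distribution and a uniform target (0.0 is best, lower is worse).

    `labels` are assumed to come from a hypothetical attribute classifier
    and to contain only values listed in `categories`.
    """
    counts = Counter(labels)
    n = len(labels)
    target = 1.0 / len(categories)
    tv = 0.5 * sum(abs(counts.get(c, 0) / n - target) for c in categories)
    return -tv


categories = ["a", "b"]
balanced = distribution_reward(["a", "b", "a", "b"], categories)
skewed = distribution_reward(["a", "a", "a", "a"], categories)
print(balanced, skewed)
```

Because the score depends on the batch as a whole, every sample in the batch shares it, which pushes the model toward balanced outputs rather than toward a single high-scoring image.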

For evaluation, the authors considered three separate reward functions: image composition, human preference, and diversity and fairness. They used the ImageReward model to compute the human preference score, which then served as the reward during training. They also compared their framework with baselines such as ReFL, RAFT, and DRaFT.

- They found that their method generalizes across all of the rewards and ranks best in terms of human preference. They hypothesized that the ReFL model suffers from reward hacking (the model over-optimizes a single metric at the cost of overall performance), whereas their method is much more robust to this effect.

- The results show that the SDv2 model is biased towards light skin tones in images of dentists and judges, whereas their method produces a much more balanced distribution.

- The proposed framework is also able to tackle the problem of compositionality in diffusion models, i.e., generating different compositions of objects in a scene, and performs much better than the SDv2 model.

- Lastly, with multi-reward joint optimization, the jointly trained model outperforms the base models on all three tasks.
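Multi-reward joint training of the kind described above can be pictured as a loop that draws a task at each step, scores a batch with that task's reward, and normalizes the scores before they drive an update. The following is a toy sketch only: the task names, reward functions, and the per-batch normalization are assumptions for illustration, and random numbers stand in for generated images.

```python
import random


def normalize(rewards, eps=1e-8):
    """Zero-mean, unit-variance rewards so tasks with different
    reward scales contribute comparably (an assumed design choice)."""
    mean = sum(rewards) / len(rewards)
    var = sum((r - mean) ** 2 for r in rewards) / len(rewards)
    std = var ** 0.5
    return [(r - mean) / (std + eps) for r in rewards]


def joint_schedule(tasks, num_steps, batch_size, rng):
    """Pick one task per step and return its normalized batch rewards."""
    history = []
    for _ in range(num_steps):
        name = rng.choice(sorted(tasks))
        reward_fn = tasks[name]
        # Stand-in for images sampled from the diffusion model.
        samples = [rng.random() for _ in range(batch_size)]
        advantages = normalize([reward_fn(s) for s in samples])
        history.append((name, advantages))
    return history


# Hypothetical reward functions on very different scales.
tasks = {
    "composition": lambda s: 100.0 * s,
    "preference": lambda s: s - 0.5,
    "diversity": lambda s: -abs(s - 0.5),
}
history = joint_schedule(tasks, num_steps=6, batch_size=4, rng=random.Random(0))
```

In a real setup, the normalized rewards would weight a policy-gradient update of the diffusion model; that step is omitted here.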

In conclusion, the authors have introduced a scalable RL training framework for fine-tuning diffusion models that addresses several shortcomings of existing models. The method performed significantly better than the compared baselines and showed advantages in generality, robustness, and the ability to generate diverse images. With this work, the authors aim to inspire future research that further enhances diffusion models' capabilities and mitigates significant issues such as bias and unfairness.

Check out the Paper and Github. All credit for this research goes to the researchers of this project.
