[ad_1]

The landscape of image segmentation has been profoundly transformed by the introduction of the Segment Anything Model (SAM), a paradigm known for its remarkable zero-shot segmentation capability. SAM’s deployment across a wide array of applications, from augmented reality to data annotation, underscores its utility. However, SAM’s computational intensity, particularly its image encoder’s demand of 2973 GMACs per image at inference, has limited its application in scenarios where time is of the essence.

The quest to enhance SAM’s efficiency without sacrificing its formidable accuracy has led to the development of models like MobileSAM, EdgeSAM, and EfficientSAM. These models, while reducing computational costs, unfortunately, experienced drops in performance, as depicted in Figure 1. Addressing this challenge, the introduction of EfficientViT-SAM utilizes the EfficientViT architecture to revamp SAM’s image encoder. This adaptation preserves the integrity of SAM’s lightweight prompt encoder and mask decoder architecture, culminating in two variants: EfficientViT-SAM-L and EfficientViT-SAM-XL. These models offer a nuanced trade-off between operational speed and segmentation accuracy, trained end-to-end using the comprehensive SA-1B dataset.

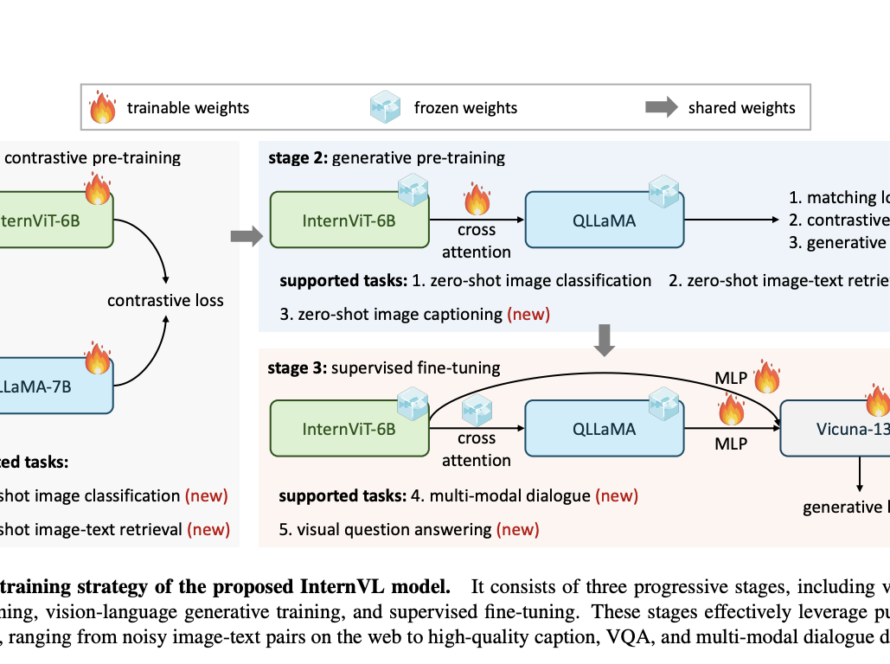

EfficientViT stands at the core of this innovation, a vision transformer model optimized for high-resolution dense prediction tasks. Its unique multi-scale linear attention module replaces traditional softmax attention with ReLU linear attention, significantly reducing computational complexity from quadratic to linear. This efficiency is achieved without compromising the model’s ability to globally perceive and learn multi-scale features, a pivotal enhancement detailed in the original EfficientViT publication.

The architecture of EfficientViT-SAM, particularly the EfficientViT-SAM-XL variant, is meticulously structured into five stages. Early stages employ convolution blocks, while the latter stages integrate EfficientViT modules, culminating in a feature fusion process that feeds into the SAM head, as illustrated in Figure 2. This architectural design ensures a seamless fusion of multi-scale features, enhancing the model’s segmentation capability.

The training process of EfficientViT-SAM is as rigorous as it is innovative. Beginning with the distillation of SAM-ViT-H’s image embeddings into EfficientViT, the model undergoes end-to-end training on the SA-1B dataset. This phase incorporates a mix of box and point prompts, employing a combination of focal and dice loss to fine-tune the model’s performance. The training strategy, including the choice of prompts and loss function, ensures that EfficientViT-SAM not only learns effectively but also adapts to various segmentation scenarios.

EfficientViT-SAM’s excellence is not merely theoretical; its empirical performance, particularly in runtime efficiency and zero-shot segmentation, is compelling. The model demonstrates an acceleration of 17 to 69 times compared to SAM, with a significant throughput advantage despite having more parameters than other acceleration efforts, as shown in Table 1.

The zero-shot segmentation capability of EfficientViT-SAM is evaluated through meticulous tests on COCO and LVIS datasets, employing both single-point and box-prompted instance segmentation. The model’s performance, as detailed in Tables 2 and 4, showcases its superior segmentation accuracy, particularly when utilizing additional point prompts or ground truth bounding boxes.

Moreover, the segmentation in the Wild benchmark further validates EfficientViT-SAM’s robustness in zero-shot segmentation across diverse datasets, with performance results encapsulated in Table 3. The qualitative results, depicted in Figure 3, highlight EfficientViT-SAM’s adeptness at segmenting objects of varying sizes, affirming its versatility and superior segmentation capability.

In conclusion, EfficientViT-SAM successfully merges the speed of EfficientViT into the SAM architecture, resulting in a substantial efficiency gain without sacrificing performance. This opens up possibilities for wider-reaching applications of powerful segmentation models, even in resource-constrained scenarios. To facilitate and encourage further research and development, pre-trained EfficientViT-SAM models have been made open-source.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and Google News. Join our 37k+ ML SubReddit, 41k+ Facebook Community, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our Telegram Channel

[ad_2]

Source link