
Image by Author

Gemini is a new model developed by Google, and with it Bard is becoming usable again. Gemini accepts images, audio, and text as input, so you can get high-quality answers to queries that combine them.

In this tutorial, we will learn about the Gemini API and how to set it up on your machine. We will also explore various Python API functions, including text generation and image understanding.

Gemini is a new AI model developed through collaboration between teams at Google, including Google Research and Google DeepMind. It was built specifically to be multimodal, meaning it can understand and work with different types of data like text, code, audio, images, and video.

Gemini is the most advanced and largest AI model developed by Google to date. It has been designed to be highly flexible so that it can operate efficiently on a wide range of systems, from data centers to mobile devices. This means that it has the potential to revolutionize the way in which businesses and developers can build and scale AI applications.

Here are three versions of the Gemini model designed for different use cases:

- Gemini Ultra: Largest and most advanced AI capable of performing complex tasks.

- Gemini Pro: A balanced model that has good performance and scalability.

- Gemini Nano: Most efficient for mobile devices.

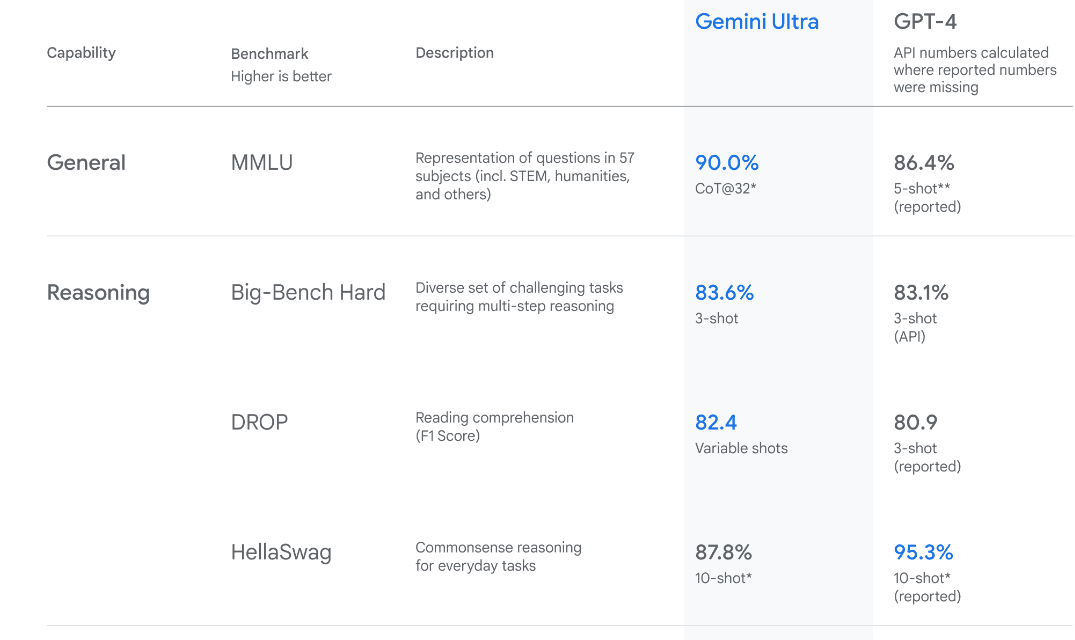

Image from Introducing Gemini

Gemini Ultra has state-of-the-art performance, exceeding the performance of GPT-4 on several metrics. It is the first model to outperform human experts on the Massive Multitask Language Understanding benchmark, which tests world knowledge and problem solving across 57 diverse subjects. This showcases its advanced understanding and problem-solving capabilities.

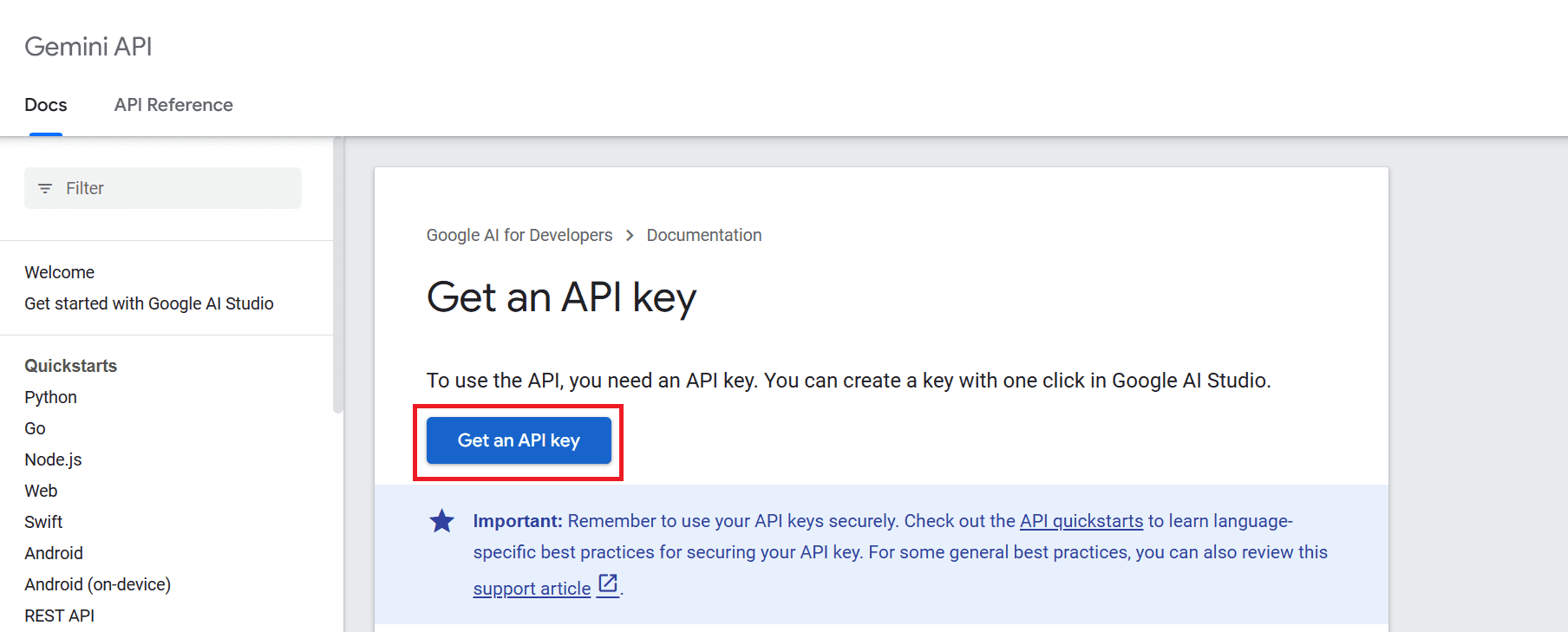

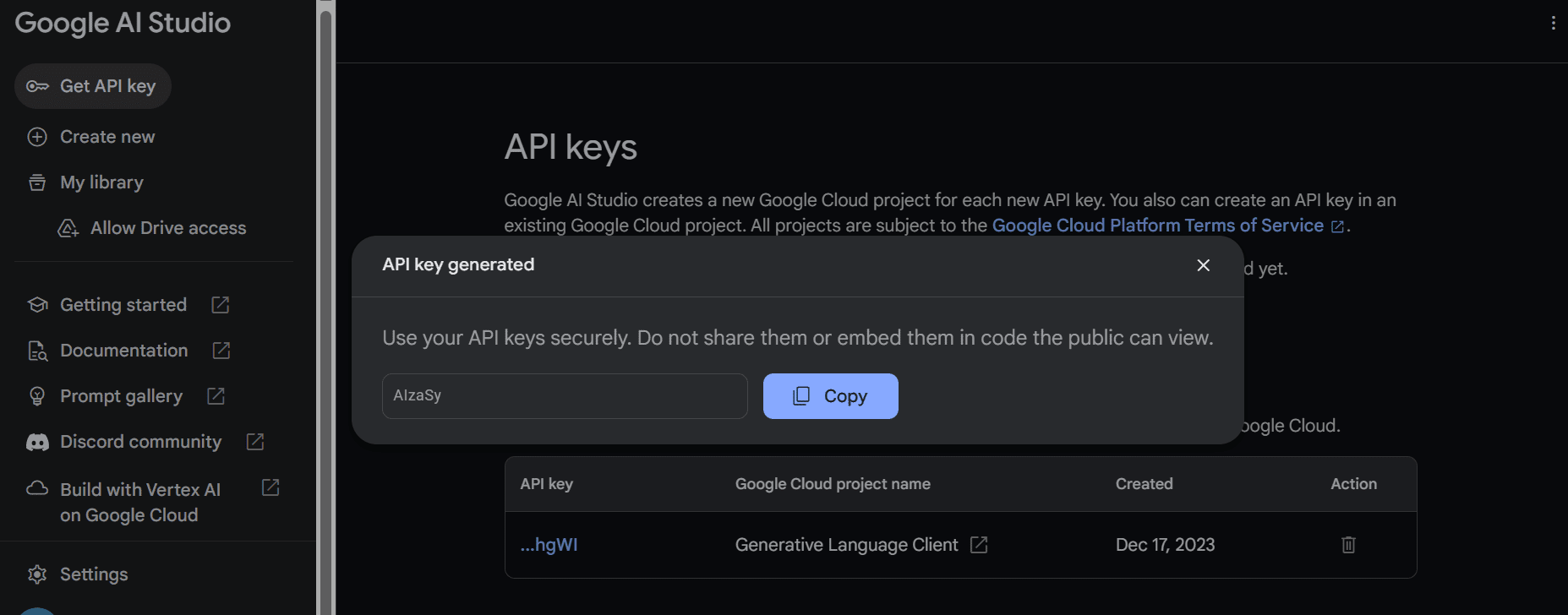

To use the API, we first have to get an API key, which you can get from here: https://ai.google.dev/tutorials/setup

After that, click the “Get an API key” button and then click “Create API key in new project”.

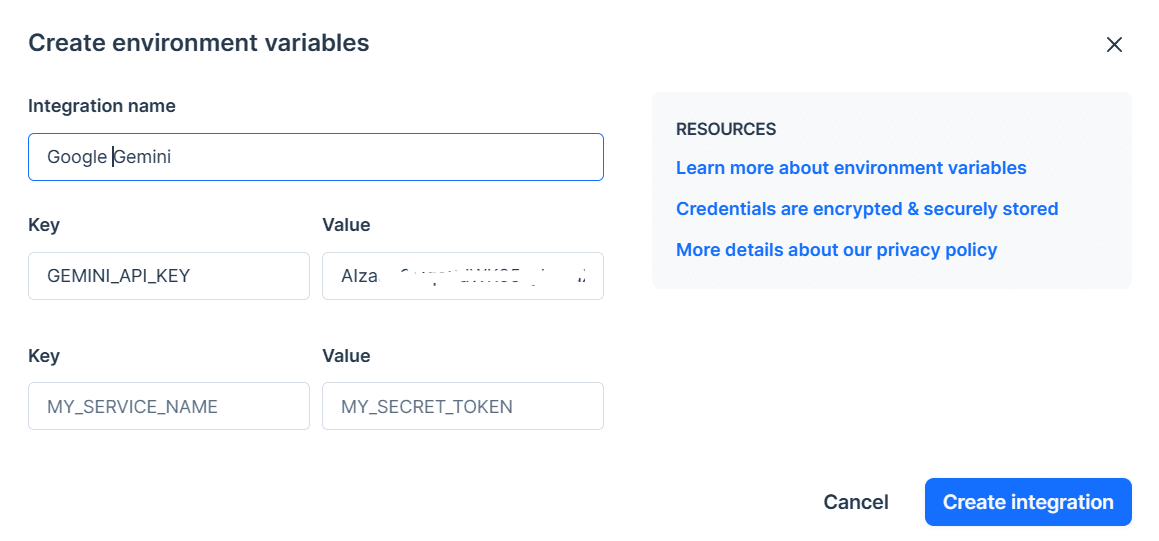

Copy the API key and set it as an environment variable. We are using Deepnote, where it is easy to set the key under the name “GEMINI_API_KEY”: go to the integrations, scroll down, and select environment variables.

In the next step, we will install the Python API using pip:

pip install -q -U google-generativeai

After that, we will read the API key from the environment and pass it to Google’s GenAI library with `genai.configure`.

import google.generativeai as genai

import os

gemini_api_key = os.environ["GEMINI_API_KEY"]

genai.configure(api_key = gemini_api_key)
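If the environment variable is missing, `os.environ["GEMINI_API_KEY"]` fails with a bare `KeyError`. A minimal sketch of a friendlier lookup follows; `load_api_key` is a hypothetical helper written for this tutorial, not part of the `google-generativeai` package.

```python
import os


def load_api_key(name="GEMINI_API_KEY", env=None):
    """Return the API key stored under `name`, or raise a readable error.

    `load_api_key` is a hypothetical helper, not a library function.
    `env` defaults to os.environ; a plain dict can be passed for testing.
    """
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(
            f"Environment variable {name!r} is not set. "
            "Create an API key and set it before running the notebook."
        )
    return key


# Usage (needs the google-generativeai package and a real key):
# import google.generativeai as genai
# genai.configure(api_key=load_api_key())
```

Passing a dictionary as `env` lets you try the helper without touching your real environment.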

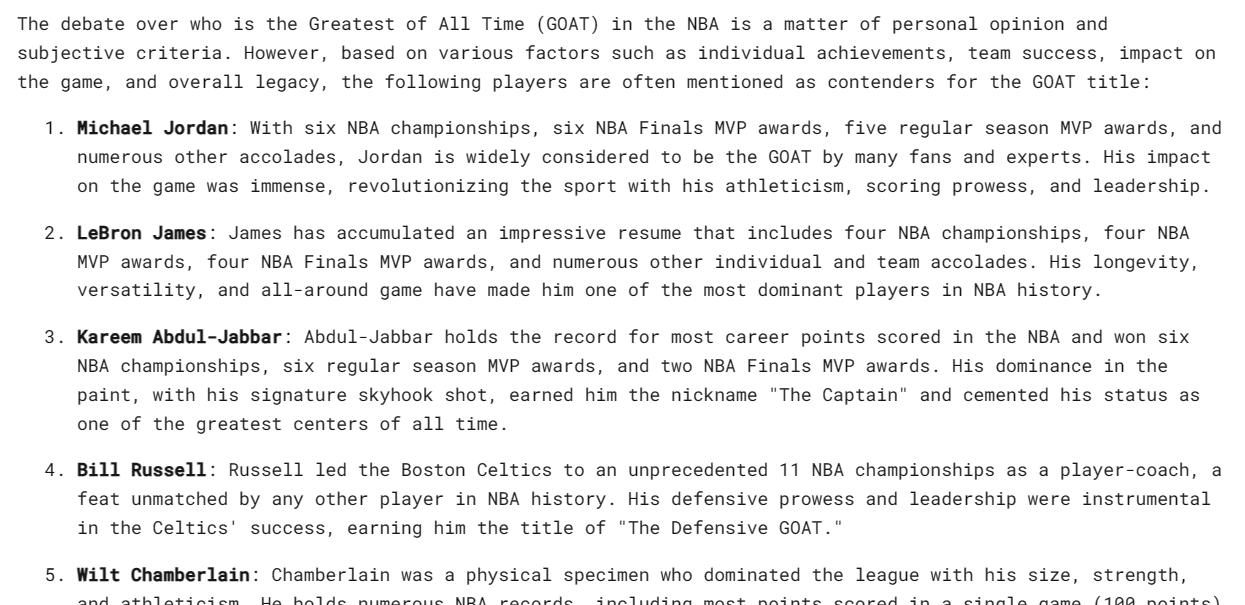

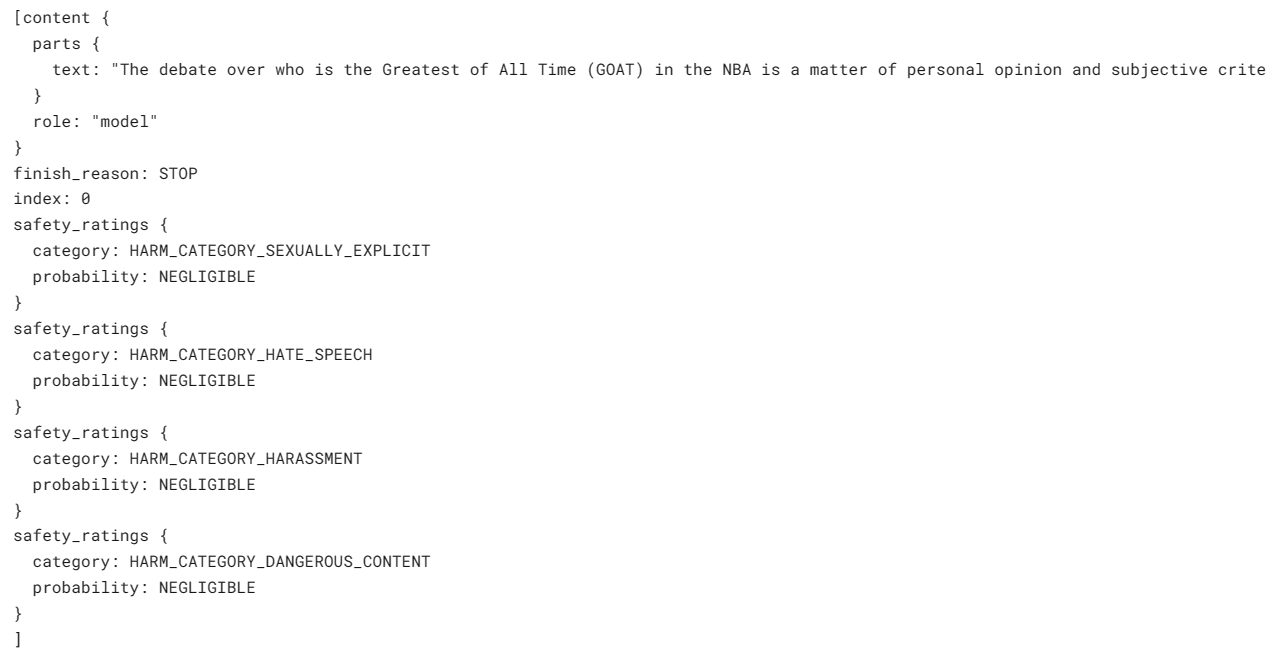

After setting up the API key, using the Gemini Pro model to generate content is simple. Provide a prompt to the `generate_content` function and display the output as Markdown.

from IPython.display import Markdown

model = genai.GenerativeModel('gemini-pro')

response = model.generate_content("Who is the GOAT in the NBA?")

Markdown(response.text)

This is amazing, but I don’t agree with the list. However, I understand that it’s all about personal preference.

Gemini can generate multiple responses, called candidates, for a single prompt, and you can select the most suitable one. In our case, we had only one response.
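To look at candidates programmatically, something like the sketch below may help. It assumes the response object exposes a `candidates` list whose items carry `content.parts`, each part having a `text` attribute. `candidate_texts` is a hypothetical helper, and the stand-in object only mimics that layout so the snippet runs without an API call.

```python
from types import SimpleNamespace


def candidate_texts(response):
    """Return one string per candidate by joining the text of its parts.

    Hypothetical helper; assumes response.candidates[i].content.parts[j].text.
    """
    texts = []
    for candidate in response.candidates:
        parts = candidate.content.parts
        # Parts without a text attribute (assumed possible) contribute nothing.
        texts.append("".join(getattr(part, "text", "") for part in parts))
    return texts


# Stand-in response with a single candidate split into two parts.
fake_response = SimpleNamespace(
    candidates=[
        SimpleNamespace(
            content=SimpleNamespace(
                parts=[SimpleNamespace(text="Hello, "), SimpleNamespace(text="world")]
            )
        )
    ]
)

print(candidate_texts(fake_response))
```

With a real response you would pass `response` instead of `fake_response`.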

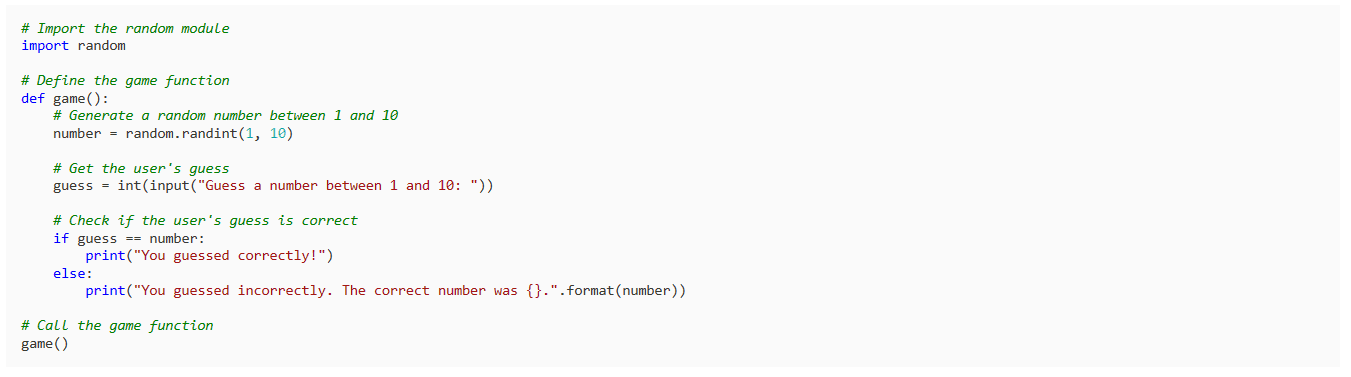

Let’s ask it to write a simple game in Python.

response = model.generate_content("Build a simple game in Python")

Markdown(response.text)

The result is simple and to the point. Most LLMs start to explain the Python code instead of writing it.

You can customize your response using the `generation_config` argument. We are limiting candidate count to 1, adding the stop word “space,” and setting max tokens and temperature.

response = model.generate_content(
    'Write a short story about aliens.',
    generation_config=genai.types.GenerationConfig(
        candidate_count=1,
        stop_sequences=['space'],
        max_output_tokens=200,
        temperature=0.7)
)

Markdown(response.text)

As you can see, the response stopped before the word “space”. Amazing.
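To make the effect of a stop sequence concrete, here is a small local illustration. The API applies stop sequences on the server during generation; `apply_stop_sequences` is a hypothetical function that only imitates the visible result on an already finished string.

```python
def apply_stop_sequences(text, stop_sequences):
    """Cut `text` just before the earliest occurrence of any stop sequence.

    Hypothetical illustration only; the real truncation happens server-side.
    """
    cut = len(text)
    for stop in stop_sequences:
        if not stop:
            continue  # an empty stop sequence would match everywhere; skip it
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


story = "The ship drifted through space for years."
print(repr(apply_stop_sequences(story, ["space"])))
```

The stop word itself is not part of the returned text, which matches what we saw in the response above.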

You can also use the `stream` argument to stream the response. It works much like streaming in the Anthropic and OpenAI APIs, and in my experience it is faster.

model = genai.GenerativeModel('gemini-pro')

response = model.generate_content("Write a Julia function for cleaning the data.", stream=True)

for chunk in response:
    print(chunk.text)
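Printing each chunk with a plain `print` adds a line break between chunks. If you also want the full text once streaming ends, a sketch like the following could be used. `collect_stream` is a hypothetical helper, and it assumes every chunk has a `text` attribute, as in the loop above. The fake chunks stand in for a real streamed response.

```python
from types import SimpleNamespace


def collect_stream(chunks, on_chunk=None):
    """Consume an iterable of chunks and return the concatenated text.

    Hypothetical helper. `on_chunk`, if given, is called with each piece
    of text as it arrives (for example, to print it live).
    """
    pieces = []
    for chunk in chunks:
        text = chunk.text
        if on_chunk is not None:
            on_chunk(text)
        pieces.append(text)
    return "".join(pieces)


# Stand-in chunks so the example runs without an API call.
fake_chunks = [
    SimpleNamespace(text="function clean"),
    SimpleNamespace(text="(df)\n"),
    SimpleNamespace(text="end"),
]

# end="" keeps the printed output free of extra line breaks between chunks.
full_text = collect_stream(fake_chunks, on_chunk=lambda t: print(t, end=""))
```

With the real API you would pass the streamed `response` object in place of `fake_chunks`.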

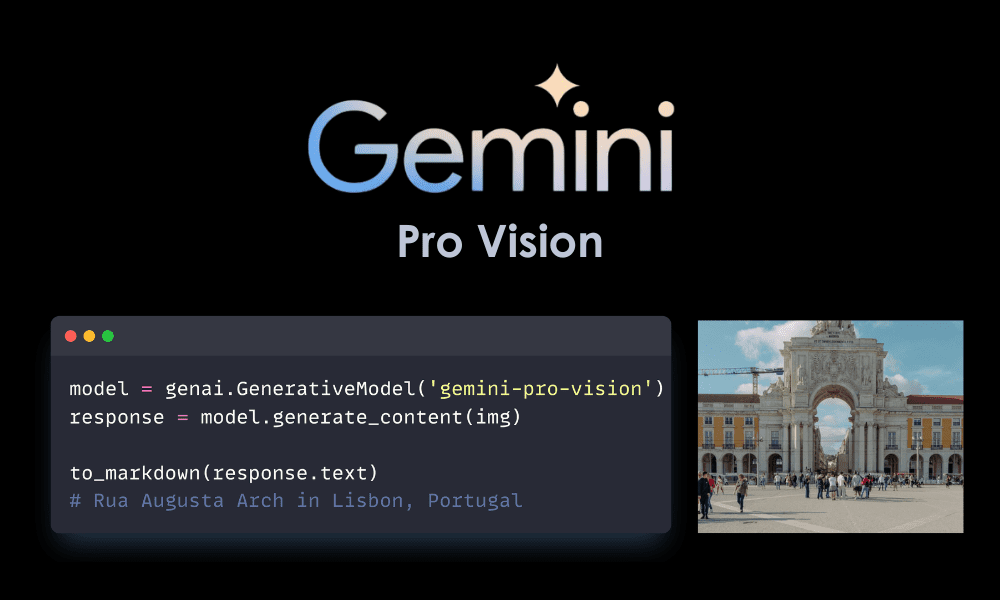

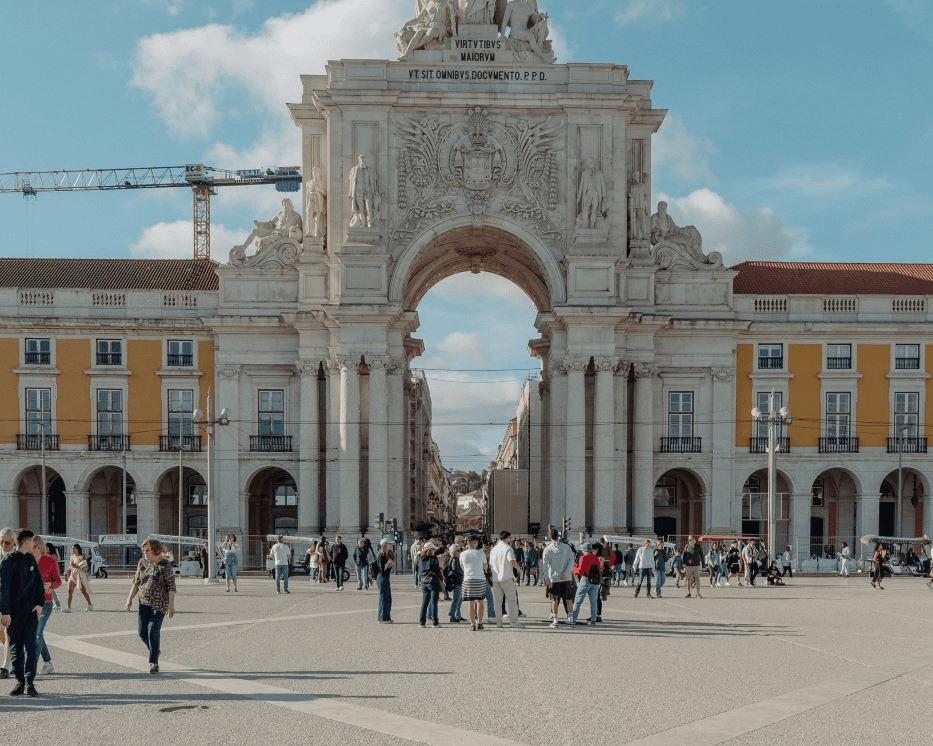

In this section, we will load Masood Aslami’s photo and use it to test the multimodality of Gemini Pro Vision.

Load the image with `PIL` and display it.

import PIL.Image

img = PIL.Image.open('images/photo-1.jpg')

img

We have a high-quality photo of the Rua Augusta Arch.

Let’s load the Gemini Pro Vision model and provide it with the image.

model = genai.GenerativeModel('gemini-pro-vision')

response = model.generate_content(img)

Markdown(response.text)

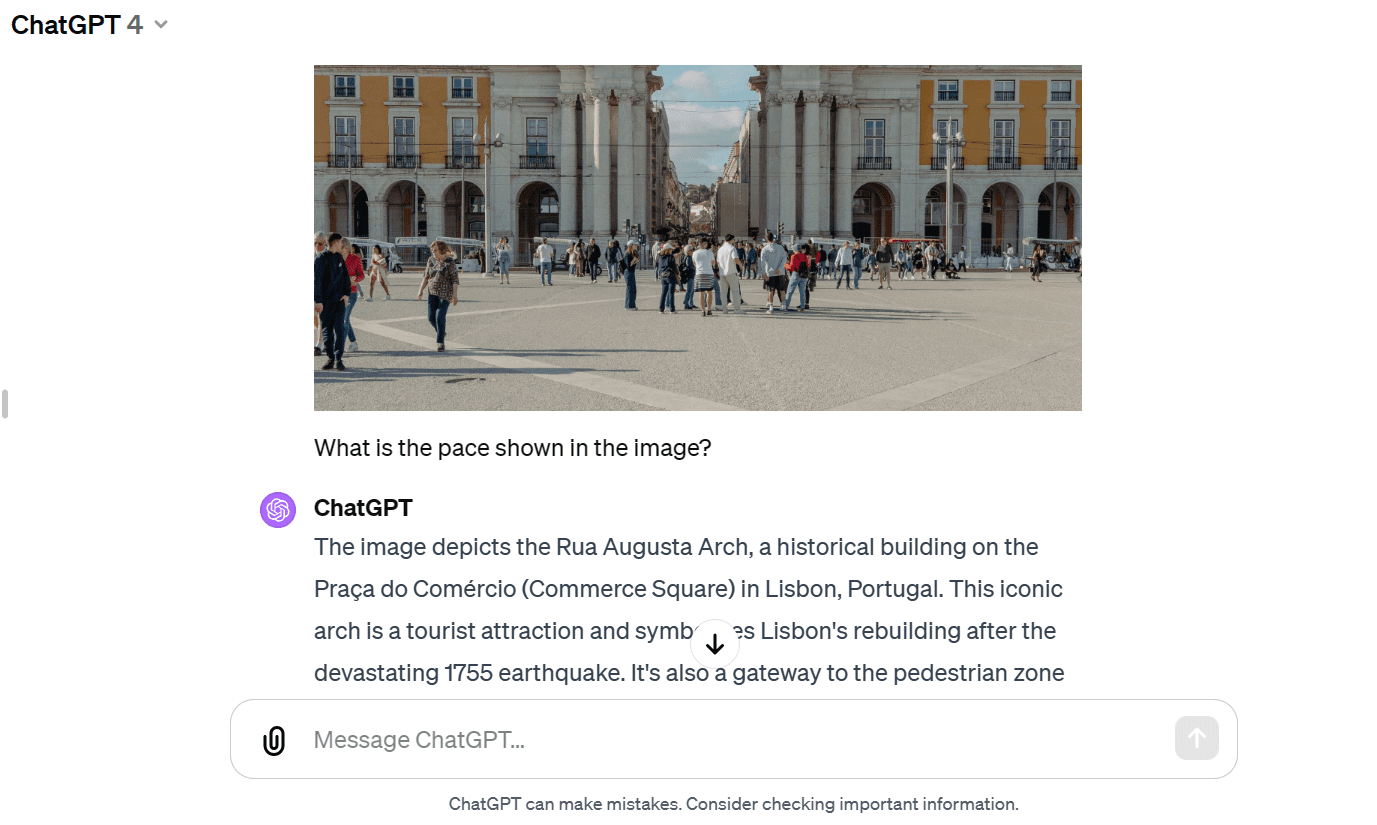

The model accurately identified the landmark and provided additional information about its history and architecture.

Let’s provide the same image to GPT-4 and ask it about the image. Both models provided similar answers, but I like the GPT-4 response more.

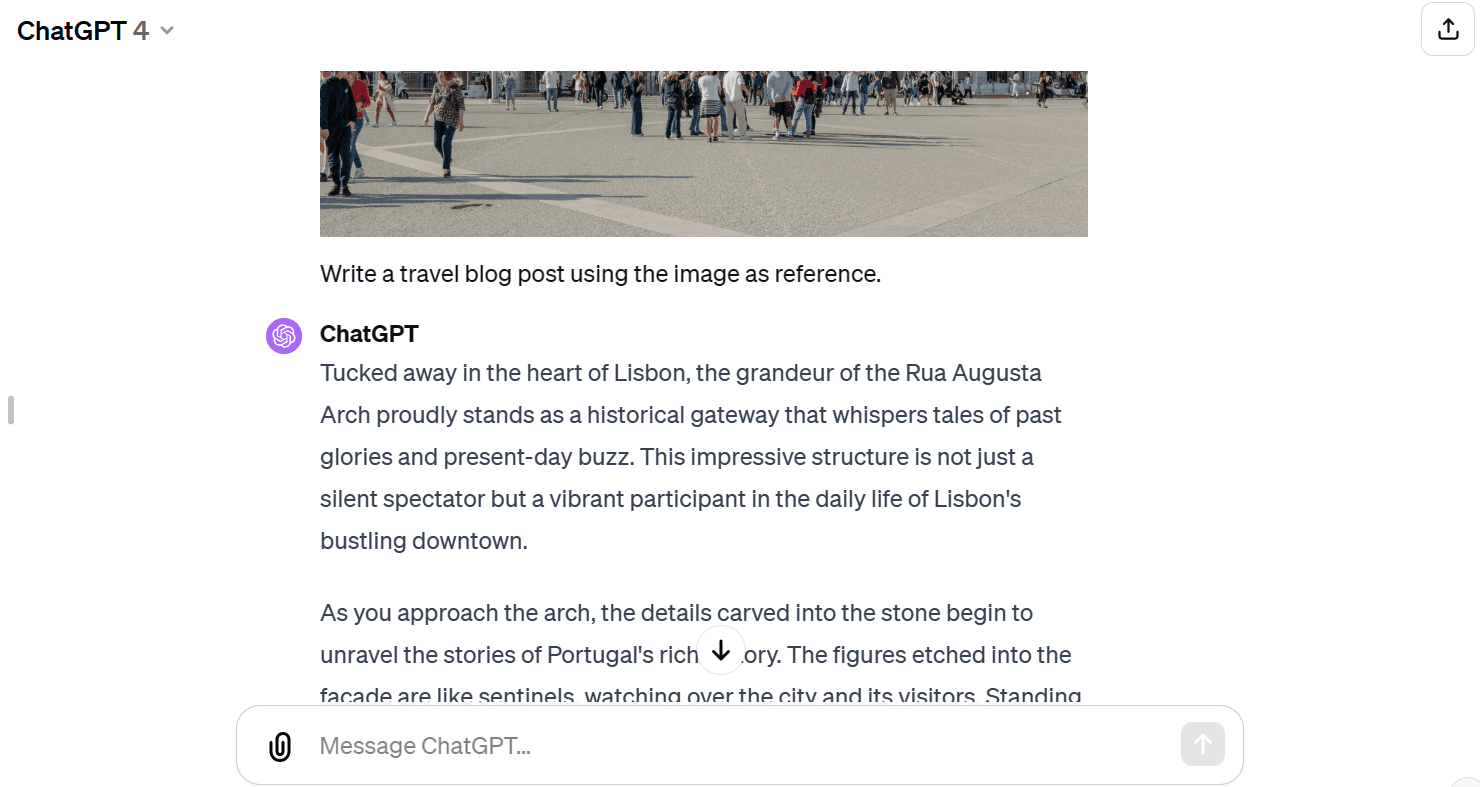

We will now provide text and the image to the API. We have asked the vision model to write a travel blog using the image as reference.

response = model.generate_content(["Write a travel blog post using the image as reference.", img])

Markdown(response.text)

It has provided me with a short blog. I was expecting a longer format.

Compared to GPT-4, the Gemini Pro Vision model has struggled to generate a long-format blog.

We can set up the model to have a back-and-forth chat session. This way, the model remembers the context and responds using the previous conversation.

In our case, we started a chat session and asked the model to help us get started with Dota 2.

model = genai.GenerativeModel('gemini-pro')

chat = model.start_chat(history=[])

chat.send_message("Can you please guide me on how to start playing Dota 2?")

chat.history

As you can see, the `chat` object saves the history of the user and model messages.

We can also display them in a Markdown style.

for message in chat.history:
    display(Markdown(f'**{message.role}**: {message.parts[0].text}'))

Let’s ask a follow-up question.

chat.send_message("Which Dota 2 heroes should I start with?")

for message in chat.history:
    display(Markdown(f'**{message.role}**: {message.parts[0].text}'))
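Since the same display loop appears twice, it can be wrapped in a small function. The sketch below is one way to do it. `format_history` is a hypothetical helper that assumes each message has a `role` and a list of `parts` with `text`, as used in the loop above. It joins all parts instead of reading only `parts[0]`, and the stand-in history lets it run without a chat session.

```python
from types import SimpleNamespace


def format_history(history):
    """Return one Markdown-formatted line per chat message.

    Hypothetical helper; assumes message.role and message.parts[i].text.
    """
    lines = []
    for message in history:
        text = "".join(getattr(part, "text", "") for part in message.parts)
        lines.append(f"**{message.role}**: {text}")
    return lines


# Stand-in history with one user turn and one model turn.
fake_history = [
    SimpleNamespace(role="user", parts=[SimpleNamespace(text="Hi")]),
    SimpleNamespace(role="model", parts=[SimpleNamespace(text="Hello! "), SimpleNamespace(text="Ready?")]),
]

for line in format_history(fake_history):
    print(line)
```

In a notebook, each returned line can be passed to `display(Markdown(line))` with `chat.history` as the input.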

We can scroll down and see the entire session with the model.

Embedding models are becoming increasingly popular for context-aware applications. The Gemini embedding-001 model allows words, sentences, or entire documents to be represented as dense vectors that encode semantic meaning. This vector representation makes it possible to easily compare the similarity between different pieces of text by comparing their corresponding embedding vectors.

We can provide the content to `embed_content` and convert the text into embeddings. It is that simple.

output = genai.embed_content(
    model="models/embedding-001",
    content="Can you please guide me on how to start playing Dota 2?",
    task_type="retrieval_document",
    title="Embedding of Dota 2 question")

print(output['embedding'][0:10])

[0.060604308, -0.023885584, -0.007826327, -0.070592545, 0.021225851, 0.043229062, 0.06876691, 0.049298503, 0.039964676, 0.08291664]

We can convert multiple chunks of text into embeddings by passing a list of strings to the `content` argument.

output = genai.embed_content(
    model="models/embedding-001",
    content=[
        "Can you please guide me on how to start playing Dota 2?",
        "Which Dota 2 heroes should I start with?",
    ],
    task_type="retrieval_document",
    title="Embedding of Dota 2 question")

for emb in output['embedding']:
    print(emb[:10])

[0.060604308, -0.023885584, -0.007826327, -0.070592545, 0.021225851, 0.043229062, 0.06876691, 0.049298503, 0.039964676, 0.08291664]

[0.04775657, -0.044990525, -0.014886052, -0.08473655, 0.04060122, 0.035374347, 0.031866882, 0.071754575, 0.042207796, 0.04577447]
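Once we have embedding vectors, a common way to compare two pieces of text is cosine similarity. The sketch below is plain Python and does not call the API. `cosine_similarity` is our own helper, and the toy vectors are made up for illustration, not real Gemini embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (range -1 to 1)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy vectors (made up): the first two point in similar directions.
v1 = [0.9, 0.1, 0.0]
v2 = [0.8, 0.2, 0.1]
v3 = [0.0, 0.1, 0.9]

print(cosine_similarity(v1, v2) > cosine_similarity(v1, v3))
```

With real embeddings you would pass the full vectors from `output['embedding']`, not the ten-value previews printed above.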

If you’re having trouble reproducing the same result, check out my Deepnote workspace.

There are so many advanced functions that we didn’t cover in this introductory tutorial. You can learn more about the Gemini API by going to the Gemini API: Quickstart with Python.

In this tutorial, we have learned about Gemini and how to access the Python API to generate responses. In particular, we have learned about text generation, visual understanding, streaming, conversation history, custom output, and embeddings. However, this just scratches the surface of what Gemini can do.

Feel free to share with me what you have built using the free Gemini API. The possibilities are limitless.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in Technology Management and a bachelor’s degree in Telecommunication Engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.
