· IaC Self-Service

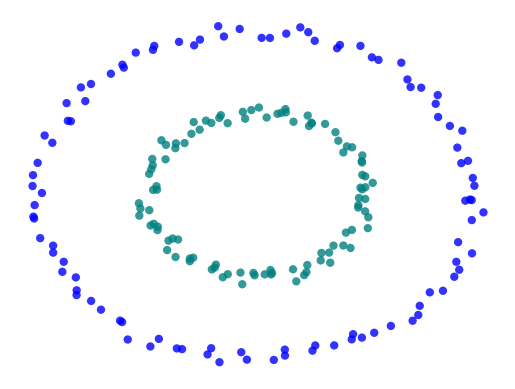

· High-Level Deployment Diagram

· Overview of Pipelines

· Infrastructure Pipeline

∘ terraform-aws-modules

∘ Implementation Prerequisites

∘ Step 1: Create GitHub environments

∘ Step 2: Add infrastructure pipeline code

∘ Step 3: Add GitHub Actions workflow for infrastructure pipeline

∘ Step 4: Kick off infrastructure pipeline

· Application Pipeline (CI/CD)

∘ Step 1: Containerize the app if it’s not yet containerized

∘ Step 2: Add GitHub Actions workflow for CI/CD

∘ Step 3: Kick off CI/CD pipeline

∘ Step 4: Launch our RAGs app

· Destroy and Cleanup

· Key End-to-end Implementation Points

· Summary

LLM applications built on third-party hosted LLMs, such as OpenAI’s models, do not carry MLOps overhead, because there is no model of our own to train, version, or serve. Such containerized LLM-powered apps or microservices can be deployed with standard DevOps practices. In this article, let’s explore how to deploy an LLM app to a cloud provider such as AWS, fully automated with infrastructure and application pipelines. LlamaIndex offers a ready-made RAGs chatbot to the community, so let’s use RAGs as the sample app to deploy.

IaC, short for Infrastructure as Code, automates infrastructure provisioning and ensures that configurations are consistent and repeatable. Many tools implement IaC; this article focuses on HashiCorp’s Terraform.
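The "consistent and repeatable" property comes from the declarative model that tools such as Terraform follow. You declare the desired state, the tool compares it with the current state, derives a plan of create/update/delete actions, and applies that plan. The toy Python sketch below illustrates only that idea. It is not how Terraform is implemented. The resource names (`vpc`, `ecs_cluster`, `old_bucket`) and their attributes are hypothetical. Running the same configuration a second time yields an empty plan, which is what makes the provisioning repeatable.

```python
from dataclasses import dataclass

# Toy model of declarative IaC (hypothetical, not Terraform's internals):
# each resource is keyed by name and described by a dict of attributes.
Resources = dict[str, dict[str, str]]


@dataclass(frozen=True)
class Change:
    action: str  # "create", "update", or "delete"
    name: str


def plan(desired: Resources, current: Resources) -> list[Change]:
    """Compare the declared (desired) state with the current state and list required changes."""
    changes: list[Change] = []
    for name, attrs in desired.items():
        if name not in current:
            changes.append(Change("create", name))
        elif current[name] != attrs:
            changes.append(Change("update", name))
    for name in current:
        if name not in desired:
            changes.append(Change("delete", name))
    # Sort for a deterministic, reviewable plan.
    return sorted(changes, key=lambda c: (c.name, c.action))


def apply(desired: Resources, current: Resources) -> Resources:
    """Execute the plan against a copy of the current state and return the new state."""
    new_state = {name: dict(attrs) for name, attrs in current.items()}
    for change in plan(desired, current):
        if change.action == "delete":
            del new_state[change.name]
        else:  # create or update: make the resource match its declaration
            new_state[change.name] = dict(desired[change.name])
    return new_state


if __name__ == "__main__":
    desired = {"vpc": {"cidr": "10.0.0.0/16"}, "ecs_cluster": {"name": "rags"}}
    current = {"vpc": {"cidr": "10.1.0.0/16"}, "old_bucket": {"name": "tmp"}}

    print(plan(desired, current))  # create ecs_cluster, delete old_bucket, update vpc
    state = apply(desired, current)
    print(plan(desired, state))  # → [] (re-applying the same config changes nothing)
```

In Terraform, the analogous steps are the `terraform plan` and `terraform apply` commands, with the current state tracked in Terraform's state file.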

The primary purpose of IaC self-service is to empower developers with more access, control, and ownership over their pipelines to boost productivity.

For those interested, about a year ago I wrote a five-part series detailing all aspects of a DevOps self-service model.