Large Vision-Language Models (VLMs) trained to understand visual input have proven effective in a broad range of scenarios, such as visual question answering, visual grounding, and optical character recognition, building on the extensive general world knowledge of Large Language Models (LLMs).

To solve intricate visual problems, humans often mark or process the given images for convenience and rigor; this process is known as manipulation. During their initial training stage, most VLMs acquire a wide range of intrinsic multimodal abilities, such as grounding (predicting bounding boxes) and text recognition. By mimicking basic human-like behaviors (e.g., cropping, zooming in), models could therefore perform evidential visual reasoning for problem-solving. However, this training approach has not been adopted because of two significant obstacles.

- The first and foremost obstacle is generating large amounts of training data that contain evidential visual reasoning paths, starting from existing language instruction-answer pairs.

- Building a general mechanism that supports varied manipulations is difficult, which makes it challenging to train VLMs with dedicated architectures while preserving their pre-existing capabilities.
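The cropping and zooming behaviors mentioned above are the simplest kind of manipulation. The following is a minimal sketch, not CogCoM's implementation: it assumes an image is a 2D list of grayscale pixel values and a box is a hypothetical `(x1, y1, x2, y2)` tuple with exclusive `x2`/`y2`, and it uses nearest-neighbor repetition for zooming. The names `crop`, `zoom_in`, and `crop_and_zoom` are illustrative.

```python
from typing import List, Tuple

Image = List[List[int]]          # hypothetical: grayscale image as rows of pixels
Box = Tuple[int, int, int, int]  # hypothetical: (x1, y1, x2, y2), x2/y2 exclusive


def crop(image: Image, box: Box) -> Image:
    """Return the sub-image inside box (x2 and y2 are exclusive)."""
    x1, y1, x2, y2 = box
    return [row[x1:x2] for row in image[y1:y2]]


def zoom_in(image: Image, factor: int) -> Image:
    """Upscale by an integer factor using nearest-neighbor repetition."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    out: Image = []
    for row in image:
        # Repeat each pixel horizontally, then repeat the widened row vertically.
        wide = [px for px in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def crop_and_zoom(image: Image, box: Box, factor: int = 2) -> Image:
    """Hypothetical manipulation: crop a region, then enlarge it."""
    return zoom_in(crop(image, box), factor)


if __name__ == "__main__":
    img = [[r * 10 + c for c in range(4)] for r in range(4)]
    for row in crop_and_zoom(img, (1, 1, 3, 3), factor=2):
        print(row)
```

Running the demo prints the 2x2 center patch enlarged to 4x4, with rows `[11, 11, 12, 12]` twice followed by `[21, 21, 22, 22]` twice. A real system would operate on image tensors and use proper interpolation; the list-based version only makes the manipulation's input and output explicit.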

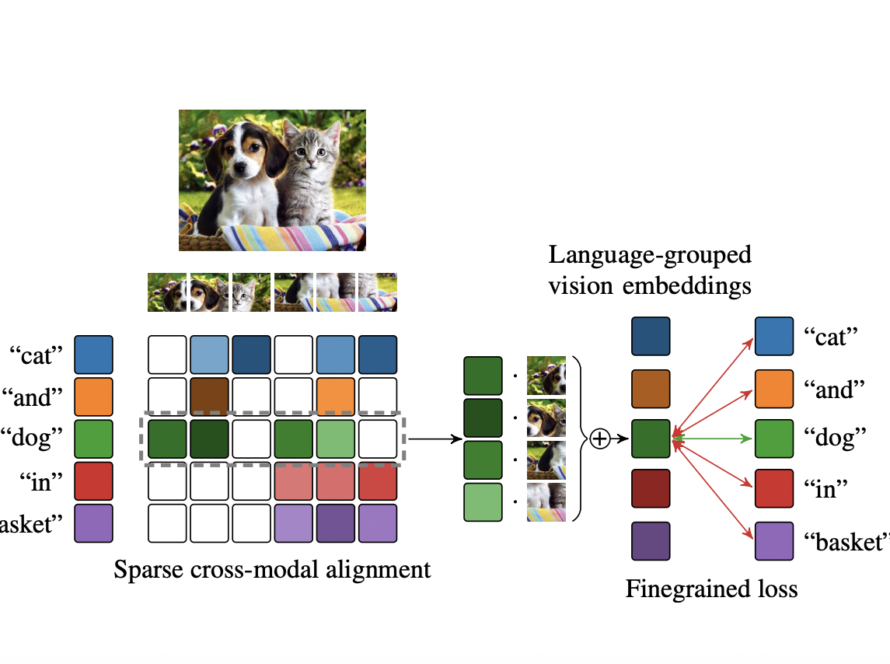

A new study by Tsinghua University and Zhipu AI explores Chain of Manipulations (CoM), a generic mechanism that enables VLMs to perform evidential visual reasoning. With CoM, a VLM acquires various visual contents (e.g., boxes, text, images) by applying a sequence of manipulations to the visual input. The researchers first built an automated data generation pipeline on top of existing image-question-answer corpora. A linguistic annotator with access to a set of manipulations is asked to supply reasoning steps for a given question, and basic visual tools are then used to obtain the returns requested by those manipulations. Finally, the researchers collect all possible manipulation returns and traverse the resulting tree to find every path that leads to the correct answer.
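The final step of this pipeline, traversing the tree of manipulation returns and keeping only the paths that end in the correct answer, can be sketched as a depth-first search. This is a minimal sketch under stated assumptions: `Node` is a hypothetical structure holding one reasoning step (with its tool return) and an optional answer on leaves, and answers are compared to the ground truth by case-insensitive exact match. The actual pipeline's data structures, traversal order, and matching rule may differ.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical tree node: one reasoning step and the tool's return."""
    step: str                      # e.g. "grounding(cup) -> bbx1"
    answer: Optional[str] = None   # set only on leaves that state an answer
    children: List["Node"] = field(default_factory=list)


def positive_paths(root: Node, gold: str) -> List[List[str]]:
    """Depth-first traversal collecting every root-to-leaf path whose leaf
    answer matches the gold answer (case-insensitive exact match)."""
    results: List[List[str]] = []
    target = gold.strip().lower()

    def dfs(node: Node, path: List[str]) -> None:
        path = path + [node.step]
        if not node.children:  # leaf: keep the chain only if it is correct
            if node.answer is not None and node.answer.strip().lower() == target:
                results.append(path)
            return
        for child in node.children:
            dfs(child, path)

    dfs(root, [])
    return results


if __name__ == "__main__":
    # Two candidate boxes from a hypothetical grounding tool; only one
    # leads to the right text after cropping and OCR.
    tree = Node("question: what is written on the cup?", children=[
        Node("grounding(cup) -> bbx1", children=[
            Node("crop_and_zoom(bbx1) -> img1", children=[
                Node("ocr(img1) -> 'coffee'", answer="coffee"),
            ]),
        ]),
        Node("grounding(cup) -> bbx2", children=[
            Node("crop_and_zoom(bbx2) -> img2", children=[
                Node("ocr(img2) -> 'tea'", answer="tea"),
            ]),
        ]),
    ])
    for p in positive_paths(tree, "coffee"):
        print(" | ".join(p))
```

Only the branch through `bbx1` survives, which mirrors how an imperfect visual tool (here, the second box) produces negative paths that are filtered out.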

To build both general and reasoning multimodal capabilities, they introduce CogCoM, a 17B VLM trained with a memory-based compatible architecture on a fusion of four categories of data, including the generated data. To reach its conclusion, the model reasons by actively applying various manipulations to acquire visual contents (such as the new image img1) and referential regions (bbx1 and bbx2). Because evaluation resources are scarce, they also present a testbed of detailed visual problems that require reasoning, together with a keypoints-aware metric that assesses the correctness of both the final answer and the solving process.
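The article does not spell out how the keypoints-aware metric is computed. Purely as an illustration of the idea of scoring both the answer and the solving process, the sketch below assumes each test item has a list of annotated key points (strings), counts a key point as covered when it appears as a case-insensitive substring of the model's reasoning, and blends the two parts with a hypothetical weight `alpha`. This is not the paper's formula; all names and the weighting scheme are assumptions.

```python
from typing import List


def keypoint_coverage(reasoning: str, keypoints: List[str]) -> float:
    """Fraction of annotated key points mentioned in the reasoning text
    (case-insensitive substring match, an illustrative assumption)."""
    if not keypoints:
        return 1.0
    text = reasoning.lower()
    hits = sum(1 for kp in keypoints if kp.lower() in text)
    return hits / len(keypoints)


def keypoints_aware_score(reasoning: str, answer: str, gold: str,
                          keypoints: List[str], alpha: float = 0.5) -> float:
    """Hypothetical blend: alpha weights answer correctness and
    (1 - alpha) weights key-point coverage of the solving process."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    correct = 1.0 if answer.strip().lower() == gold.strip().lower() else 0.0
    return alpha * correct + (1 - alpha) * keypoint_coverage(reasoning, keypoints)


if __name__ == "__main__":
    reasoning = "Locate the cup at bbx1, zoom in to get img1, then read 'coffee'."
    keypoints = ["cup", "zoom", "coffee", "logo"]  # 3 of 4 are covered
    print(keypoints_aware_score(reasoning, "coffee", "coffee", keypoints))  # → 0.875
```

The point of such a design is that two models with the same final answer can still be distinguished by how much of the expected reasoning they actually carried out.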

The team conducts comprehensive experiments on eight benchmarks spanning three classes of abilities, including visual grounding (RefCOCO, RefCOCO+, and RefCOCOg), hallucination validation (POPE), and the proposed reasoning benchmark (AutoCoM-test). The results show that the method consistently delivers competitive or better performance. Experiments on the proposed testbed further show that, by incorporating the generated reasoning chains, CogCoM quickly reaches competitive performance within only a few training steps.

The team also notes limitations: the linguistic solving steps lack diversity, and the visual tools are not always accurate, which produces many negative paths (although these could be useful if exploited well). They suggest addressing these limitations with dedicated prompts and improved visual tools. In addition, the current model may suffer performance drops because it re-inputs the manipulated images using rigid prompts. The authors expect that incorporating the physical manipulations into computations in the vector space could improve this.

The researchers believe that the proposed visual reasoning process may accelerate VLM development for complex visual problem-solving. Furthermore, the introduced data generation pipeline could be applied to various training scenarios, which may help advance data-driven machine learning.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Dhanshree Shenwai is a Computer Science Engineer with solid experience at FinTech companies covering the financial, cards and payments, and banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements in today's evolving world that make everyone's life easier.