
Image by Author | Midjourney & Canva

Pandas offers various functions that enable users to clean and analyze data. In this article, we will get into some of the key Pandas functions necessary for extracting valuable insights from your data. These functions will equip you with the skills needed to transform raw data into meaningful information.

Data Loading

Loading data is the first step of data analysis. It allows us to read data from various file formats into a Pandas DataFrame. This step is crucial for accessing and manipulating data within Python. Let’s explore how to load data using Pandas.

import pandas as pd

# Load the contents of a CSV file into a DataFrame
df = pd.read_csv('data.csv')

This code snippet imports the Pandas library and uses the read_csv() function to load data from a CSV file. By default, read_csv() assumes that the first row contains column names and uses commas as the delimiter.
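To see those defaults at work, here is a minimal sketch that reads small in-memory CSV strings instead of a file. The data and column names (`name`, `score`) are hypothetical, and `io.StringIO` stands in for `data.csv`. It also shows how `sep` and `header`/`names` override the defaults when a file does not follow them.

```python
import io

import pandas as pd

# Hypothetical CSV contents kept in memory, so the sketch runs without a data.csv file
csv_text = "name,score\nAlice,90\nBob,85\n"
df = pd.read_csv(io.StringIO(csv_text))  # defaults: first row is the header, comma delimiter

# A semicolon-delimited file needs the delimiter passed explicitly via sep
semi_text = "name;score\nAlice;90\nBob;85\n"
df_semi = pd.read_csv(io.StringIO(semi_text), sep=";")

# A file with no header row: header=None, then supply the column names yourself
raw_text = "Alice,90\nBob,85\n"
df_raw = pd.read_csv(io.StringIO(raw_text), header=None, names=["name", "score"])

print(df.columns.tolist())
```

All three calls produce the same two-row DataFrame. Only the arguments needed to describe each file's layout change.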

Data Inspection

Data inspection means examining key attributes of a dataset, such as the number of rows and columns, the data types, and summary statistics. This gives us an overview of the dataset and its characteristics before we proceed with further analysis.

df.head(): It returns the first five rows of the DataFrame by default. It’s useful for inspecting the top part of the data to ensure it’s loaded correctly.

     A    B     C
0  1.0  5.0  10.0
1  2.0  NaN  11.0
2  NaN  NaN  12.0
3  4.0  8.0  12.0
4  5.0  8.0  12.0

df.tail(): It returns the last five rows of the DataFrame by default. It’s useful for inspecting the bottom part of the data.

     A    B     C
1  2.0  NaN  11.0
2  NaN  NaN  12.0
3  4.0  8.0  12.0
4  5.0  8.0  12.0
5  5.0  8.0   NaN
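Both methods also take a row count. As a small sketch, the snippet below rebuilds the six-row DataFrame implied by the head() and tail() outputs (columns A, B, C) and asks for two rows from each end.

```python
import numpy as np
import pandas as pd

# Rebuild the six-row example DataFrame from the head()/tail() output
df = pd.DataFrame({
    "A": [1.0, 2.0, np.nan, 4.0, 5.0, 5.0],
    "B": [5.0, np.nan, np.nan, 8.0, 8.0, 8.0],
    "C": [10.0, 11.0, 12.0, 12.0, 12.0, np.nan],
})

first_two = df.head(2)  # rows with index 0 and 1
last_two = df.tail(2)   # rows with index 4 and 5

print(first_two)
print(last_two)
```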

df.info(): This method provides a concise summary of the DataFrame. It includes the number of entries, column names, non-null counts, and data types.

<class 'pandas.core.frame.DataFrame'>
RangeIndex: 6 entries, 0 to 5
Data columns (total 3 columns):
 #   Column  Non-Null Count  Dtype
---  ------  --------------  -----
 0   A       5 non-null      float64
 1   B       4 non-null      float64
 2   C       5 non-null      float64
dtypes: float64(3)
memory usage: 272.0 bytes
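info() prints its summary and returns None. When you need the same numbers as values, for example to check them in code, df.shape, df.count(), and df.dtypes expose them. A minimal sketch using the same six-row example DataFrame:

```python
import numpy as np
import pandas as pd

# Rebuild the six-row example DataFrame from the head()/tail() output
df = pd.DataFrame({
    "A": [1.0, 2.0, np.nan, 4.0, 5.0, 5.0],
    "B": [5.0, np.nan, np.nan, 8.0, 8.0, 8.0],
    "C": [10.0, 11.0, 12.0, 12.0, 12.0, np.nan],
})

n_rows, n_cols = df.shape  # number of entries and number of columns
non_null = df.count()      # non-null values per column, like info()'s Non-Null Count
dtypes = df.dtypes         # data type of each column

print(n_rows, n_cols)
print(non_null)
```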

df.describe(): This generates descriptive statistics for numerical columns in the DataFrame. It includes count, mean, standard deviation, min, max, and the quartile values (25%, 50%, 75%).

              A         B          C
count  5.000000  4.000000   5.000000
mean   3.400000  7.250000  11.400000
std    1.816590  1.500000   0.894427
min    1.000000  5.000000  10.000000
25%    2.000000  7.250000  11.000000
50%    4.000000  8.000000  12.000000
75%    5.000000  8.000000  12.000000
max    5.000000  8.000000  12.000000
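A short sketch of two details that are easy to miss. describe() skips missing values and reports the sample standard deviation (ddof=1), so its std row matches Series.std() on the same column. The percentiles argument changes which quantiles are shown, and the median is always kept. The DataFrame is the same six-row example as above.

```python
import numpy as np
import pandas as pd

# Rebuild the six-row example DataFrame from the head()/tail() output
df = pd.DataFrame({
    "A": [1.0, 2.0, np.nan, 4.0, 5.0, 5.0],
    "B": [5.0, np.nan, np.nan, 8.0, 8.0, 8.0],
    "C": [10.0, 11.0, 12.0, 12.0, 12.0, np.nan],
})

summary = df.describe()

# describe() ignores NaN and uses the sample standard deviation (ddof=1),
# so its std row agrees with Series.std() on the same column
std_a = summary.loc["std", "A"]
manual_std_a = df["A"].std()

# Custom percentiles; the 50% row is added automatically
custom = df.describe(percentiles=[0.1, 0.9])
print(custom)
```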

Data Cleaning

Data cleaning is a crucial step in the data analysis process as it ensures the quality of the dataset. Pandas offers a variety of functions to address common data quality issues such as missing values, duplicates, and inconsistencies.

df.dropna(): This is used to remove any rows that contain missing values.

Example: clean_df = df.dropna()
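dropna() has a few options that control what counts as "missing enough" to drop. The sketch below applies them to the same six-row example DataFrame.

```python
import numpy as np
import pandas as pd

# Rebuild the six-row example DataFrame from the head()/tail() output
df = pd.DataFrame({
    "A": [1.0, 2.0, np.nan, 4.0, 5.0, 5.0],
    "B": [5.0, np.nan, np.nan, 8.0, 8.0, 8.0],
    "C": [10.0, 11.0, 12.0, 12.0, 12.0, np.nan],
})

clean_df = df.dropna()                   # drop rows with at least one NaN
only_all_missing = df.dropna(how="all")  # drop a row only if every value is NaN
subset_df = df.dropna(subset=["A"])      # look for NaN in column A only
cols_df = df.dropna(axis=1)              # drop columns instead; all three contain a NaN here

print(clean_df)
```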

df.fillna(): This replaces missing values with a value you specify. In the example below, each column's missing values are replaced with that column's mean.

Example: filled_df = df.fillna(df.mean())
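The fill value can be a single constant, a Series of per-column values (which is what df.mean() returns), or a dictionary mapping column names to values. A minimal sketch on the same six-row example DataFrame:

```python
import numpy as np
import pandas as pd

# Rebuild the six-row example DataFrame from the head()/tail() output
df = pd.DataFrame({
    "A": [1.0, 2.0, np.nan, 4.0, 5.0, 5.0],
    "B": [5.0, np.nan, np.nan, 8.0, 8.0, 8.0],
    "C": [10.0, 11.0, 12.0, 12.0, 12.0, np.nan],
})

filled_df = df.fillna(df.mean())  # column means: A=3.4, B=7.25, C=11.4
zero_df = df.fillna(0)            # one constant for every column

# Different value per column; columns not in the dict (here C) keep their NaN
mixed_df = df.fillna({"A": 0, "B": df["B"].median()})

print(filled_df)
```

If the DataFrame also holds text columns, df.mean(numeric_only=True) avoids the TypeError that recent pandas versions (2.0 and later) raise when averaging non-numeric data.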

df.isnull(): This returns a Boolean DataFrame of the same shape, with True wherever a value is missing.

Example: missing_values = df.isnull()
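Because True counts as 1, the Boolean mask from isnull() can be summed to count missing values per column, or reduced with any() to find the affected rows. A short sketch on the same six-row example DataFrame (df.isna() is an equivalent alias):

```python
import numpy as np
import pandas as pd

# Rebuild the six-row example DataFrame from the head()/tail() output
df = pd.DataFrame({
    "A": [1.0, 2.0, np.nan, 4.0, 5.0, 5.0],
    "B": [5.0, np.nan, np.nan, 8.0, 8.0, 8.0],
    "C": [10.0, 11.0, 12.0, 12.0, 12.0, np.nan],
})

missing_mask = df.isnull()                        # True where a value is NaN
missing_per_column = missing_mask.sum()           # count of NaN in each column
rows_with_missing = df[missing_mask.any(axis=1)]  # rows with at least one NaN

print(missing_per_column)
```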

Data Selection and Filtering

Data selection and filtering are essential techniques for manipulating and analyzing data in Pandas. These operations allow us to extract specific rows, columns, or subsets of data based on certain conditions. This makes it easier to focus on relevant information and perform analysis. Here’s a look at various methods for data selection and filtering in Pandas:

df['column_name']: Selects a single column and returns it as a Series.

Example: df["Name"]

0      Alice
1        Bob
2    Charlie
3      David
4        Eva
Name: Name, dtype: object
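The number of brackets decides the return type: single brackets give a Series, double brackets give a DataFrame even for one column. In this sketch the names match the output above, but the ages for Charlie, David, and Eva are made up for illustration.

```python
import pandas as pd

# Hypothetical data: names from the output above, some ages invented
df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Charlie", "David", "Eva"],
    "Age": [24, 27, 22, 32, 29],
})

names = df["Name"]       # single brackets: a Series
names_df = df[["Name"]]  # double brackets: a one-column DataFrame
same_names = df.Name     # attribute access works when the name is a valid identifier

print(type(names).__name__, type(names_df).__name__)
```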

df[['col1', 'col2']]: Selects multiple columns and returns them as a DataFrame.

Example: df[["Name", "Age"]]

This returns a new DataFrame containing only the Name and Age columns, for all five rows.
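The list inside the brackets is what makes this a multi-column selection. The sketch below uses a hypothetical three-row DataFrame with an added City column. It shows that the result follows the order of the list, and that a single comma-separated string such as "Name, City" is looked up as one column label, which raises a KeyError.

```python
import pandas as pd

# Hypothetical DataFrame with a City column added for illustration
df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Charlie"],
    "Age": [24, 27, 22],
    "City": ["Paris", "London", "Berlin"],
})

name_city = df[["Name", "City"]]  # list of labels: a DataFrame with those columns
reordered = df[["City", "Name"]]  # columns come back in the order listed

# A single string is treated as one column label, so this raises KeyError
single_label_failed = False
try:
    df["Name, City"]
except KeyError:
    single_label_failed = True
```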

df.iloc[]: Selects rows and columns by integer position. Slices exclude the end position, so the example below returns the first two rows.

Example: df.iloc[0:2]

    Name  Age
0  Alice   24
1    Bob   27
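For comparison, here is a sketch of iloc next to label-based loc and a boolean filter, which covers the "based on certain conditions" case mentioned at the start of this section. Alice's and Bob's ages match the output above. The other ages are hypothetical.

```python
import pandas as pd

# Hypothetical data: Alice's and Bob's ages match the output above, the rest are invented
df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Charlie", "David", "Eva"],
    "Age": [24, 27, 22, 32, 29],
})

first_two = df.iloc[0:2]            # positions 0 and 1; the end position is excluded
cell = df.iloc[1, 0]                # row position 1, column position 0
by_label = df.loc[0:2, "Name"]      # loc uses labels, and label slices include the end
older_than_25 = df[df["Age"] > 25]  # boolean mask keeps rows where the condition holds

print(older_than_25)
```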

Data Aggregation and Grouping

Aggregating and grouping are key steps in summarizing and analyzing data in Pandas. These operations condense large datasets into meaningful summaries by applying functions such as mean, sum, and count.

df.groupby(): Groups data based on specified columns.

Example: df.groupby(['Year']).agg({'Population': 'sum', 'Area_sq_miles': 'mean'})

      Population  Area_sq_miles
Year
2020    15025198     332.866667
2021    15080249     332.866667
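The dataset behind the output above is not shown, so this sketch uses a small hypothetical table with the same column names. It runs the same dictionary-style aggregation and the equivalent named-aggregation syntax, which also lets you choose the output column names.

```python
import pandas as pd

# Hypothetical city-level data, not the dataset behind the output above
df = pd.DataFrame({
    "Year": [2020, 2020, 2021, 2021],
    "City": ["A", "B", "A", "B"],
    "Population": [100, 300, 120, 330],
    "Area_sq_miles": [10.0, 30.0, 10.0, 30.0],
})

summary = df.groupby("Year").agg({"Population": "sum", "Area_sq_miles": "mean"})

# Named aggregation: output_name=(column, function)
named = df.groupby("Year").agg(
    total_population=("Population", "sum"),
    mean_area=("Area_sq_miles", "mean"),
)

print(summary)
```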

df.agg(): Provides a way to apply multiple aggregation functions at once.

Example: df.groupby(['Year']).agg({'Population': ['sum', 'mean', 'max']})

     Population
            sum            mean      max
Year
2020   15025198  5008399.333333  6000000
2021   15080249  5026749.666667  6500000
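Passing a list of functions produces two-level column labels such as ("Population", "sum"). The sketch below, again on hypothetical data, shows one common way to flatten them. It also shows that agg() works on a plain column without groupby().

```python
import pandas as pd

# Hypothetical city-level data, not the dataset behind the output above
df = pd.DataFrame({
    "Year": [2020, 2020, 2021, 2021],
    "City": ["A", "B", "A", "B"],
    "Population": [100, 300, 120, 330],
})

stats = df.groupby("Year").agg({"Population": ["sum", "mean", "max"]})

# Join each (column, function) pair into a single label such as "Population_sum"
flat = stats.copy()
flat.columns = ["_".join(col) for col in stats.columns]

# agg() without groupby: one summary over the whole column
overall = df["Population"].agg(["sum", "mean", "max"])

print(flat)
```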

Data Merging and Joining

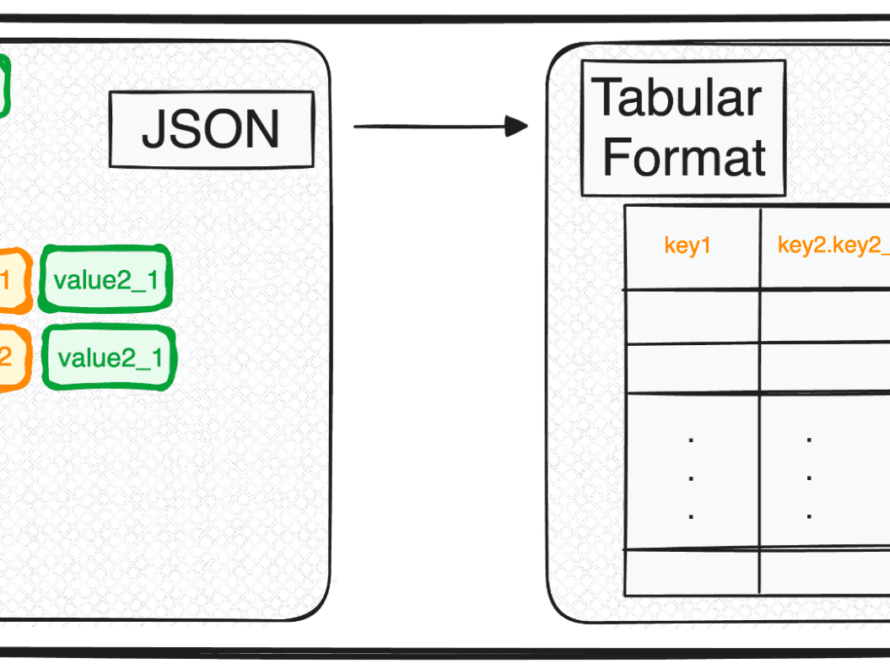

Pandas provides functions to merge, concatenate, and join DataFrames, which makes it straightforward to combine data from multiple sources into a single table.

pd.merge(): Combines two DataFrames based on a common key or index.

Example: merged_df = pd.merge(df1, df2, on='A')
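By default, pd.merge() performs an inner join, keeping only key values present in both DataFrames. The how argument changes that. In this sketch, df1 and df2 are small hypothetical tables that share the key column A. indicator=True adds a _merge column recording where each row came from.

```python
import pandas as pd

# Hypothetical tables sharing the key column "A"
df1 = pd.DataFrame({"A": [1, 2, 3], "B": ["x", "y", "z"]})
df2 = pd.DataFrame({"A": [2, 3, 4], "C": [10, 20, 30]})

inner = pd.merge(df1, df2, on="A")             # default how="inner": keys 2 and 3
left = pd.merge(df1, df2, on="A", how="left")  # every df1 row; C is NaN where unmatched

# Outer join keeps keys from both sides; _merge says which side each row came from
outer = pd.merge(df1, df2, on="A", how="outer", indicator=True)

print(outer)
```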

pd.concat(): Concatenates DataFrames along a particular axis (rows or columns).

Example: concatenated_df = pd.concat([df1, df2])
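pd.concat() stacks rows by default (axis=0) and keeps each input's index labels, so duplicates can appear. The sketch below, using two small hypothetical DataFrames, shows ignore_index=True for a fresh index and axis=1 for placing frames side by side.

```python
import pandas as pd

# Two small hypothetical DataFrames with the same columns
df1 = pd.DataFrame({"A": [1, 2], "B": [3, 4]})
df2 = pd.DataFrame({"A": [5, 6], "B": [7, 8]})

stacked = pd.concat([df1, df2])                        # index labels repeat: 0, 1, 0, 1
renumbered = pd.concat([df1, df2], ignore_index=True)  # new index 0..3
side_by_side = pd.concat([df1, df2], axis=1)           # columns side by side, aligned on index

print(renumbered)
```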

Time Series Analysis

Time series analysis deals with data indexed by time. Pandas provides data structures and functions designed specifically for this, such as converting strings to datetimes, using dates as the index, and shifting values across time periods.

pd.to_datetime(): Converts a column of strings to datetime values.

Example: df['date'] = pd.to_datetime(df['date'])

        date  value
0 2022-01-01     10
1 2022-01-02     20
2 2022-01-03     30
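Once a column is converted, the .dt accessor exposes date parts. This sketch rebuilds the three-row example and also shows two to_datetime() options that often matter in practice: an explicit format for day-first strings, and errors="coerce" to turn unparseable strings into NaT instead of raising. The extra date strings are hypothetical.

```python
import pandas as pd

# The three-row example from above
df = pd.DataFrame({"date": ["2022-01-01", "2022-01-02", "2022-01-03"], "value": [10, 20, 30]})
df["date"] = pd.to_datetime(df["date"])
weekday_names = df["date"].dt.day_name()  # the .dt accessor works on datetime columns

# Day-first strings: spell out the format so "03/01/2022" means 3 January
day_first = pd.to_datetime(pd.Series(["03/01/2022", "04/01/2022"]), format="%d/%m/%Y")

# errors="coerce" turns strings that cannot be parsed into NaT
coerced = pd.to_datetime(pd.Series(["2022-01-01", "not a date"]), errors="coerce")

print(weekday_names.tolist())
```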

df.set_index(): Sets a column, here the datetime column, as the index of the DataFrame.

Example: df.set_index('date', inplace=True)

            value
date
2022-01-01     10
2022-01-02     20
2022-01-03     30
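With a DatetimeIndex in place, rows can be selected with date strings, and label slices include both endpoints. A short sketch on the same three rows. It assigns the result of set_index() instead of using inplace=True, which gives the same DataFrame.

```python
import pandas as pd

df = pd.DataFrame({
    "date": pd.to_datetime(["2022-01-01", "2022-01-02", "2022-01-03"]),
    "value": [10, 20, 30],
})
df = df.set_index("date")  # returns a new DataFrame indexed by date

is_datetime_index = isinstance(df.index, pd.DatetimeIndex)

# Date-string slicing on a DatetimeIndex; both ends are included
first_two_days = df.loc["2022-01-01":"2022-01-02"]

print(first_two_days)
```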

df.shift(): Shifts the values forward or backward by a specified number of periods while keeping the index unchanged. Positions left empty by the shift are filled with NaN.

Example: df_shifted = df.shift(periods=1)

            value
date
2022-01-01    NaN
2022-01-02   10.0
2022-01-03   20.0
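A common use of shift() is comparing each row with the previous one, for example to compute a day-over-day change. The sketch below uses the same three-day series. It also shows a negative period and the freq argument, which moves the index instead of the values.

```python
import pandas as pd

df = pd.DataFrame(
    {"value": [10, 20, 30]},
    index=pd.to_datetime(["2022-01-01", "2022-01-02", "2022-01-03"]),
)
df.index.name = "date"

previous = df["value"].shift(periods=1)     # each row gets the value from one period earlier
change = df["value"] - previous             # day-over-day change; df["value"].diff() is equivalent
following = df["value"].shift(periods=-1)   # a negative period shifts values backward
moved_index = df.shift(periods=1, freq="D") # with freq, the dates move and the values stay put

print(change)
```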

Conclusion

In this article, we covered some of the Pandas functions that are essential for data analysis. By mastering these tools, you can load and inspect data, handle missing values, select the rows and columns you need, and perform other data manipulation tasks. We also explored more advanced techniques such as data aggregation, merging, and time series analysis.

Jayita Gulati is a machine learning enthusiast and technical writer driven by her passion for building machine learning models. She holds a Master’s degree in Computer Science from the University of Liverpool.
