Additionally, Gaussian splatting doesn’t involve any neural network at all. There isn’t even a small MLP, nothing “neural”; a scene is essentially just a set of points in space. This in itself is already an attention grabber. It is quite refreshing to see such a method gaining popularity in our AI-obsessed world with research…

The recent rapid advances in the natural language processing capabilities of large language models (LLMs) have stirred tremendous excitement about their potential to achieve human-level intelligence. Their ability to produce remarkably coherent text and engage in dialogue after exposure to vast datasets seems to point towards flexible, general-purpose reasoning skills. However, a growing chorus…

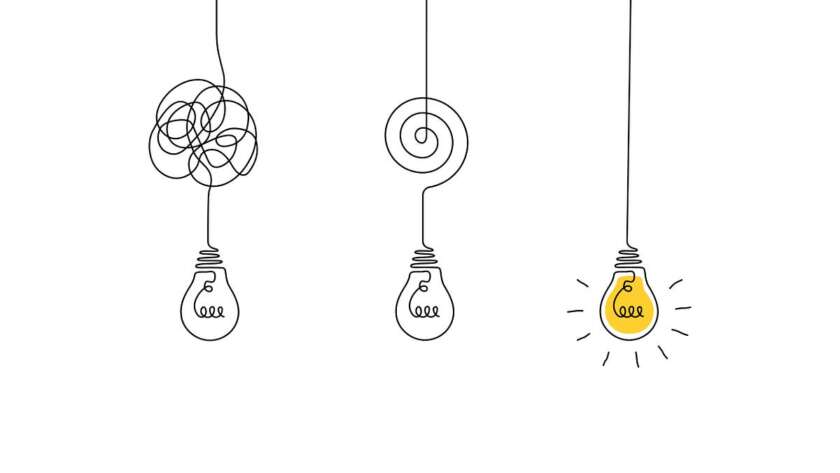

When I began my data science journey in grad school, I had a naive view of the discipline. Namely, I was hyper-focused on learning tools and technologies (e.g., LSTM, SHAP, VAE, SOM, SQL). While a technical foundation is necessary to be a successful data scientist, focusing too much on tools creates the “Hammer…

Math behind this parameter-efficient fine-tuning method

Fine-tuning large pre-trained models is computationally challenging, often involving the adjustment of millions of parameters. This traditional fine-tuning approach, while effective, demands substantial computational resources and time, posing a bottleneck for adapting these models to specific tasks. LoRA presented an effective solution to this problem by decomposing the…
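To give a rough feel for why a low-rank decomposition helps, here is a minimal sketch of the parameter arithmetic. It assumes the standard LoRA formulation, in which a frozen weight matrix of shape d × k is adapted through two small trainable matrices of shape d × r and r × k. The function name `lora_param_counts` and the example sizes are hypothetical and not taken from the article.

```python
def lora_param_counts(d: int, k: int, r: int) -> tuple[int, int]:
    """Return (full, low_rank) trainable-parameter counts for one weight matrix.

    Assumption: the update to a d x k weight is factored as B @ A,
    with B of shape d x r and A of shape r x k (rank r much smaller than d, k).
    """
    if min(d, k, r) <= 0:
        raise ValueError("d, k and r must be positive")
    full = d * k            # parameters touched by ordinary fine-tuning
    low_rank = r * (d + k)  # parameters in B (d*r) plus A (r*k)
    return full, low_rank


# Illustrative sizes only: a 4096 x 4096 projection adapted with rank 8.
full, low_rank = lora_param_counts(4096, 4096, 8)
print(full, low_rank, full / low_rank)  # 16777216 65536 256.0
```

With these illustrative numbers, the low-rank update trains 256 times fewer parameters for that one matrix. Actual savings depend on the chosen rank and on which layers are adapted.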

1. Choosing a Chatbot

As simple as this one may sound, it is far from a trivial question. The options are manifold: building your own chatbot using open-source code;[1] using one of the gazillion chatbot APIs on the market, which offer the simplest and quickest ready-set-go setup;[2] fine-tuning…

Social media spam as a case study

Photo by Nong on Unsplash

Disclaimer: the examples in this post are for illustrative purposes and are not commentary on any specific content policy at any specific company. All views expressed in this article are mine and do not reflect my employer.

Why is there any spam on…

GENERATIVE AI

A step-by-step tutorial on querying SQL databases with human language

Image by the author (generated via Midjourney)

Many businesses have a lot of proprietary data stored in their databases. If there’s a virtual agent that understands human language and can query these databases, it opens up big opportunities for these businesses. Think of…

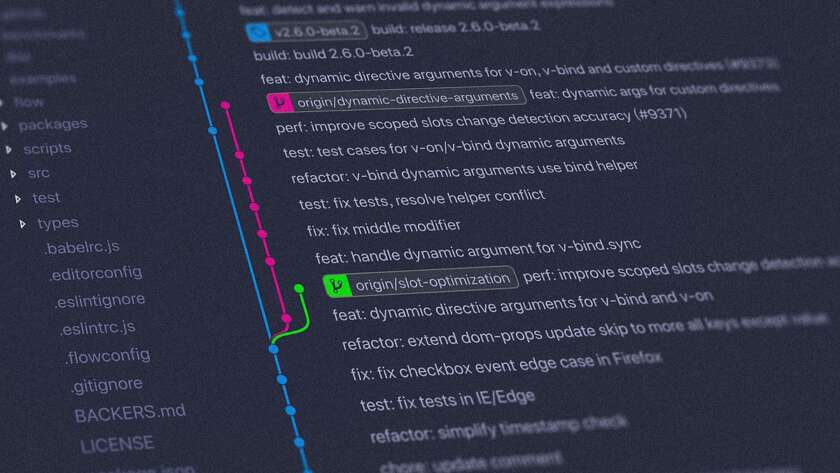

Photo by Yancy Min on Unsplash

A hands-on guide for adding a motivational GitHub action to your repository

I’ll start with a confession: as a software engineer, I hated and avoided writing tests for years. I came across so many dusty projects: some with no tests at all, others that had tests but those…

Splitting text, the simple way

(Image generated by author with DALL-E 3)

When preparing data for embedding and retrieval in a RAG system, splitting the text into appropriately sized chunks is crucial. This process is guided by two main factors: Model Constraints and Retrieval Effectiveness.

Model Constraints

Embedding models have a maximum token length for…
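To make the chunking idea concrete, here is a minimal sketch of a fixed-size splitter with overlap. It is not the article's method: it assumes whitespace-separated words as a crude stand-in for model tokens, and the function name `chunk_words` and its parameters `max_tokens` and `overlap` are hypothetical. A real pipeline would count tokens with the embedding model's own tokenizer.

```python
def chunk_words(text: str, max_tokens: int = 200, overlap: int = 20) -> list[str]:
    """Split text into chunks of at most max_tokens words.

    Consecutive chunks share `overlap` words so that context spanning a
    boundary is not lost. Words are a rough proxy for tokens (assumption).
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must satisfy 0 <= overlap < max_tokens")

    words = text.split()
    step = max_tokens - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # the last window already reached the end of the text
    return chunks


print(chunk_words("a b c d e f g h i j", max_tokens=4, overlap=1))
# ['a b c d', 'd e f g', 'g h i j']
```

Smaller chunks stay comfortably under a model's length limit, while the overlap trades some redundancy for a lower chance of cutting a relevant passage in two.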

A Comprehensive Guide to MRI Analysis through Deep Learning models in PyTorch

Photo by Olga Rai on Adobe Stock.

First of all, I’d like to introduce myself. My name is Carla Pitarch, and I am a PhD candidate in AI. My research centers on developing an automated brain tumor grade classification system by extracting information…